| Oracle® Database Semantic Technologies Developer's Guide 11g Release 2 (11.2) E25609-05 |

|

|

PDF · Mobi · ePub |

| Oracle® Database Semantic Technologies Developer's Guide 11g Release 2 (11.2) E25609-05 |

|

|

PDF · Mobi · ePub |

The Jena Adapter for Oracle Database (referred to here as the Jena Adapter) provides a Java-based interface to Oracle Database Semantic Technologies by implementing the well-known Jena Graph, Model, and DatasetGraph APIs. (Apache Jena is an open source framework, and its license and copyright conditions are described in http://jena.sourceforge.net/license.html.)

The DatasetGraph APIs are for managing named graph data, also referred to as quads. In addition, the Jena Adapter provides network analytical functions on top of semantic data through integrating with the Oracle Spatial network data model.

This chapter assumes that you are familiar with major concepts explained in Chapter 1, "Oracle Database Semantic Technologies Overview" and Chapter 2, "OWL Concepts". It also assumes that you are familiar with the overall capabilities and use of the Jena Java framework. For information about the Jena framework, see http://jena.apache.org/, especially the Documentation link under Quick Links. If you use the network analytical function, you should also be familiar with the Oracle Spatial network data model, which is documented in Oracle Spatial Topology and Network Data Models Developer's Guide.

The Jena Adapter extends the semantic data management capabilities of Oracle Database Release 11.2 RDF/OWL.

This chapter includes the following major topics:

Section 7.3, "Setting Up the Semantic Technologies Environment"

Section 7.6, "Additions to the SPARQL Syntax to Support Other Features"

Section 7.7, "Functions Supported in SPARQL Queries through the Jena Adapter"

Section 7.13, "JavaScript Object Notation (JSON) Format Support"

Disclaimer:

The current Jena Adapter release has been tested against Jena 2.6.4, ARQ 2.8.8, and Joseki 3.4.4. Because of the nature of open source projects, you should not use this Jena Adapter with later versions of Jena, ARQ, or Joseki.To use the Jena Adapter, you must first ensure that the system environment has the necessary software, including Oracle Database 11g Release 2 with the Spatial and Partitioning options and with Semantic Technologies support enabled, Jena version 2.6.4, the Jena Adapter, and JDK 1.6. You can set up the software environment by performing these actions:

Install Oracle Database Release 11.2 Enterprise Edition with the Oracle Spatial and Partitioning Options.

If you have not yet installed Release 11.2.0.3 or later, install the 11.2.0.2 Patch Set for Oracle Database Server (https://updates.oracle.com/Orion/PatchDetails/process_form?patch_num=10098816).

Enable the support for Semantic Technologies, as explained in Section A.1.

Install Jena (version 2.6.4): download the .zip file from http://sourceforge.net/projects/jena/files/Jena/Jena-2.6.4/jena-2.6.4.zip/download and unzip it. (The directory or folder into which you unzip it will be referred to as <Jena_DIR>.)

The Java package will be unpacked into <Jena_DIR>.

Note that Jena 2.6.4 comes with ARQ version 2.8.7 (arq-2.8.7.jar); however, this version of the Jena Adapter actually requires a newer ARQ version (arq-2.8.8.jar). You can download arq-2.8.8.jar from http://sourceforge.net/projects/jena/files/ARQ/ARQ-2.8.8/arq-2.8.8.zip/download and unzip it to a temporary directory. Remove the arq-2.8.7.jar file from <Jena_DIR>/Jena-2.6.4/lib/, and copy arq-2.8.8.jar from the temporary directory into <Jena_DIR>/Jena-2.6.4/lib/.

Download the Jena Adapter (jena_adaptor_for_release11.2.0.3.zip) from the Oracle Database Semantic Technologies page (http://www.oracle.com/technetwork/database/options/semantic-tech/and click Downloads), and unzip it into a temporary directory, such as (on a Linux system) /tmp/jena_adapter. (If this temporary directory does not already exist, create it before the unzip operation.)

The Jena Adapter directories and files have the following structure:

examples/

examples/Test10.java

examples/Test11.java

examples/Test12.java

examples/Test13.java

examples/Test14.java

examples/Test15.java

examples/Test16.java

examples/Test17.java

examples/Test18.java

examples/Test19.java

examples/Test20.java

examples/Test6.java

examples/Test7.java

examples/Test8.java

examples/Test9.java

examples/Test.java

jar/

jar/sdordfclient.jar

javadoc/

javadoc/javadoc.zip

joseki/

joseki/index.html

joseki/application.xml

joseki/update.html

joseki/xml-to-html.xsl

joseki/joseki-config.ttl

sparqlgateway/

default.xslt

noop.xslt

qb1.sparql

. . .

browse.jsp

index.html

. . .

application.xml

WEB-INF/

WEB-INF/web.xml

WEB-INF/weblogic.xml

WEB-INF/lib/

StyleSheets/

StyleSheets/paginator.css

StyleSheets/sg.css

StyleSheets/sgmin.css

Scripts/

Scripts/load.js

Scripts/paginator.js

Scripts/tooltip.js

admin/

admin/sparql.jsp

admin/xslt.jsp

web/

web/web.xml

Copy ojdbc6.jar into <Jena_DIR>/lib (Linux) or <Jena_DIR>\lib (Windows). (ojdbc6.jar is in $ORACLE_HOME/jdbc/lib or %ORACLE_HOME%\jdbc\lib.)

Copy sdordf.jar into <Jena_DIR>/lib (Linux) or <Jena_DIR>\lib (Windows). (sdordf.jar is in $ORACLE_HOME/md/jlib or %ORACLE_HOME%\md\jlib.)

If JDK 1.6 is not already installed, install it.

If the JAVA_HOME environment variable does not already refer to the JDK 1.6 installation, define it accordingly. For example:

setenv JAVA_HOME /usr/local/packages/jdk16/

If the SPARQL service to support the SPARQL protocol is not set up, set it up as explained in Section 7.2.

After setting up the software environment, ensure that your Semantic Technologies environment can enable you to use the Jena Adapter to perform queries, as explained in Section 7.3.

Setting up a SPARQL endpoint using the Jena Adapter involves downloading Joseki, an open source HTTP engine that supports the SPARQL protocol and SPARQL queries. This section explains how to set up a SPARQL service using a servlet deployed in WebLogic Server. The number and complexity of the steps reflect the fact that Oracle is not permitted to bundle all the dependent third-party libraries in a .war or .ear file.

Download and Install Oracle WebLogic Server 11g Release 1 (10.3.1). For details, see http://www.oracle.com/technology/products/weblogic/ and http://www.oracle.com/technetwork/middleware/ias/downloads/wls-main-097127.html.

Ensure that you have Java 6 installed, because it is required by Joseki 3.4.4.

Download Joseki 3.4.4 (joseki-3.4.4.zip) from http://sourceforge.net/projects/joseki/files/Joseki-SPARQL/.

Unpack joseki-3.4.4.zip into a temporary directory. For example:

mkdir /tmp/joseki cp joseki-3.4.4.zip /tmp/joseki cd /tmp/joseki unzip joseki-3.4.4.zip

Ensure that you have downloaded and unzipped the Jena Adapter for Oracle Database, as explained in Section 7.1.

Create a directory named joseki.war at the same level as the jena_adapter directory, and go to it. For example:

mkdir /tmp/joseki.war cd /tmp/joseki.war

Copy necessary files into the directory created in the preceding step:

cp /tmp/jena_adapter/joseki/* /tmp/joseki.war cp -rf /tmp/joseki/Joseki-3.4.4/webapps/joseki/StyleSheets /tmp/joseki.war

Create directories and copy necessary files into them, as follows:

mkdir /tmp/joseki.war/WEB-INF cp /tmp/jena_adapter/web/* /tmp/joseki.war/WEB-INF mkdir /tmp/joseki.war/WEB-INF/lib cp /tmp/joseki/Joseki-3.4.4/lib/*.jar /tmp/joseki.war/WEB-INF/lib cp /tmp/jena_adapter/jar/*.jar /tmp/joseki.war/WEB-INF/lib ## ## Assume ORACLE_HOME points to the home directory of a Release 11.2.0.3 Oracle Database. ## cp $ORACLE_HOME/md/jlib/sdordf.jar /tmp/joseki.war/WEB-INF/lib cp $ORACLE_HOME/jdbc/lib/ojdbc6.jar /tmp/joseki.war/WEB-INF/lib

Using the WebLogic Server Administration console, create a J2EE data source named OracleSemDS. During the data source creation, you can specify a user and password for the database schema that contains the relevant semantic data against which SPARQL queries are to be executed.

If you need help in creating this data source, see Section 7.2.1, "Creating the Required Data Source Using WebLogic Server".

Go to the autodeploy directory of WebLogic Server and copy files, as follows. (For information about auto-deploying applications in development domains, see: http://docs.oracle.com/cd/E11035_01/wls100/deployment/autodeploy.html)

cd <domain_name>/autodeploy cp -rf /tmp/joseki.war <domain_name>/autodeploy

In the preceding example, <domain_name> is the name of a WebLogic Server domain.

Note that while you can run a WebLogic Server domain in two different modes, development and production, only development mode allows you use the auto-deployment feature.

Check the files and the directory structure to see if they reflect the following:

. |-- META-INF | `-- MANIFEST.MF |-- StyleSheets | `-- joseki.css |-- WEB-INF | |-- lib | | |-- arq-2.8.8-tests.jar | | |-- arq-2.8.8.jar | | |-- icu4j-3.4.4.jar | | |-- iri-0.8.jar | | |-- jena-2.6.4-tests.jar | | |-- jena-2.6.4.jar | | |-- jetty-6.1.25.jar | | |-- jetty-util-6.1.25.jar | | |-- joseki-3.4.4.jar | | |-- junit-4.5.jar | | |-- log4j-1.2.14.jar | | |-- lucene-core-2.3.1.jar | | |-- ojdbc6.jar | | |-- sdb-1.3.4.jar | | |-- sdordf.jar | | |-- sdordfclient.jar | | |-- servlet-api-2.5-20081211.jar | | |-- slf4j-api-1.5.8.jar | | |-- slf4j-log4j12-1.5.8.jar | | |-- stax-api-1.0.1.jar | | |-- tdb-0.8.10.jar | | |-- wstx-asl-3.2.9.jar | | `-- xercesImpl-2.7.1.jar | `-- web.xml |-- application.xml |-- index.html |-- joseki-config.ttl |-- update.html `-- xml-to-html.xsl

If you want to build a .war file from the /tmp/joseki.war directory (note that a .war file is required if you want to deploy Joseki to an OC4J container), enter the following commands:

cd /tmp/joseki.war jar cvf /tmp/joseki_app.war *

Start or restart WebLogic Server.

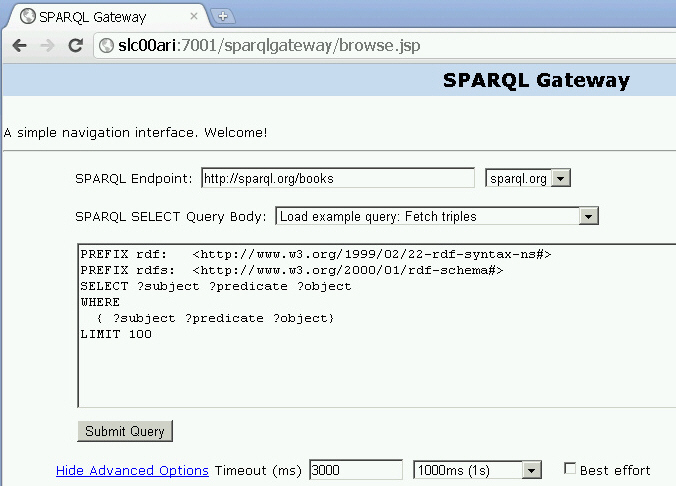

Verify your deployment by using your Web browser to connect to a URL in the following format (assume that the Web application is deployed at port 7001): http://<hostname>:7001/joseki

You should see a page titled Oracle SPARQL Service Endpoint using Joseki, and the first text box should contain an example SPARQL query.

Click Submit Query.

You should see a page titled Oracle SPARQL Endpoint Query Results. There may or may not be any results, depending on the underlying semantic model against which the query is executed.

By default, the joseki-config.ttl file contains an oracle:Dataset definition using a model named M_NAMED_GRAPHS. The following snippet shows the configuration. The oracle:allGraphs predicate denotes that the SPARQL service endpoint will serve queries using all graphs stored in the M_NAMED_GRAPHS model.

<#oracle> rdf:type oracle:Dataset;

joseki:poolSize 1 ; ## Number of concurrent connections allowed to this dataset.

oracle:connection

[ a oracle:OracleConnection ;

];

oracle:allGraphs [ oracle:firstModel "M_NAMED_GRAPHS" ] .

The M_NAMED_GRAPHS model will be created automatically (if it does not already exist) upon the first SPARQL query request. You can add a few example triples and quads to test the named graph functions; for example:

SQL> CONNECT username/password

SQL> INSERT INTO m_named_graphs_tpl VALUES(sdo_rdf_triple_s('m_named_graphs','<urn:s>','<urn:p>','<urn:o>'));

SQL> INSERT INTO m_named_graphs_tpl VALUES(sdo_rdf_triple_s('m_named_graphs:<urn:G1>','<urn:g1_s>','<urn:g1_p>','<urn:g1_o>'));

SQL> INSERT INTO m_named_graphs_tpl VALUES(sdo_rdf_triple_s('m_named_graphs:<urn:G2>','<urn:g2_s>','<urn:g2_p>','<urn:g2_o>'));

SQL> COMMIT;

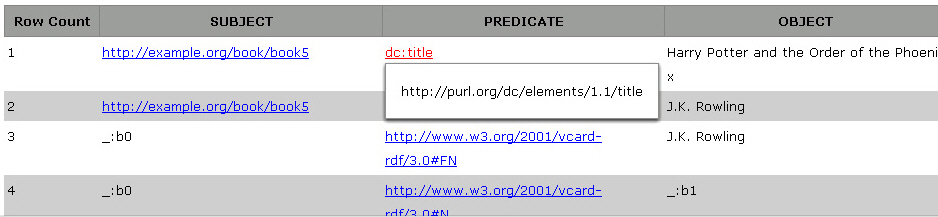

After inserting the rows, go to http://<hostname>:7001/joseki, type the following SPARQL query, and click Submit Query:

SELECT ?g ?s ?p ?o

WHERE

{ GRAPH ?g { ?s ?p ?o} }

The result should be an HTML table with four columns and two sets of result bindings.

The http://<hostname>:7001/joseki page also contains a JSON Output option. If this option is selected (enabled), the SPARQL query response is converted to JSON format.

If you need help creating the required J2EE data source using the WebLogic Server admin console, you can follow these steps:

Login to: http://<hostname>:7001/console

In the Domain Structure panel, click Services.

Click JDBC

Click Data Sources.

In the Summary of JDBC Data Sources panel, click New under the Data Sources table.

In the Create a New JDBC Data Source panel, enter or select the following values.

Name: OracleSemDS

JNDI Name: OracleSemDS

Database Type: Oracle

Database Driver: Oracle's Driver (Thin) Versions: 9.0.1,9.2.0,10,11

Click Next twice.

In the Connection Properties panel, enter the appropriate values for the Database Name, Host Name, Port, Database User Name (schema that contains semantic data), Password fields.

Click Next.

Select (check) the target server or servers to which you want to deploy this OracleSemDS data source.

Click Finish.

You should see a message that all changes have been activated and no restart is necessary.

By default, the SPARQL Service endpoint assumes that the queries are to be executed against a semantic model with a pre-set name. This semantic model is owned by the schema specified in the J2EE data source with JNDI name OracleSemDS. Note that you do not need to create this model explicitly using PL/SQL or Java; if the model does not exist in the network, it will be automatically created, along with the necessary application table and index.

Note:

Effective with the Jena Adapter release in November 2011, the application table index (<model_name>_idx) definition is changed to accommodate named graph data (quads).For existing models created by an older version of the Jena Adapter, you can migrate the application table index name and definition by using the static OracleUtils.migrateApplicationTableIndex(oracle, graph, dop) method in the oracle.spatial.rdf.client.jena package. (See the Javadoc for more information.) Note that the new index definition is critical to the performance of DML operations against the application table.

However, you must configure the SPARQL service by editing the joseki-config.ttl configuration file, which is in <domain_name>/autodeploy/joseki.war.

The supplied joseki-config.ttl file includes a section similar to the following for the Oracle data set:

#

## Datasets

#

[] ja:loadClass "oracle.spatial.rdf.client.jena.assembler.OracleAssemblerVocab" .

oracle:Dataset rdfs:subClassOf ja:RDFDataset .

<#oracle> rdf:type oracle:Dataset;

joseki:poolSize 1 ; ## Number of concurrent connections allowed to this dataset.

oracle:connection

[ a oracle:OracleConnection ;

];

oracle:defaultModel [ oracle:firstModel "TEST_MODEL" ] .

In this section of the file, you can:

Modify the joseki:poolSize value, which specifies the number of concurrent connections allowed to this Oracle data set (<#oracle> rdf:type oracle:Dataset;), which points to various RDF models in the database.

Modify the name (or the object value of oracle:firstModel predicate) of the defaultModel, to use a different semantic model for queries. You can also specify multiple models, and one or more rulebases for this defaultModel.

For example, the following specifies two models (named ABOX and TBOX) and an OWLPRIME rulebase for the default model. Note that models specified using the oracle:modelName predicate must exist; they will not be created automatically.

<#oracle> rdf:type oracle:Dataset;

joseki:poolSize 1 ; ## Number of concurrent connections allowed to this dataset.

oracle:connection

[ a oracle:OracleConnection ;

];

oracle:defaultModel [ oracle:firstModel "ABOX";

oracle:modelName "TBOX";

oracle:rulebaseName "OWLPRIME" ] .

Specify named graphs in the dataset. For example, you can create a named graph called <http://G1> based on two Oracle models and an entailment, as follows.

<#oracle> rdf:type oracle:Dataset;

joseki:poolSize 1 ; ## Number of concurrent connections allowed to this dataset.

oracle:connection

[ a oracle:OracleConnection ;

];

oracle:namedModel [ oracle:firstModel "ABOX";

oracle:modelName "TBOX";

oracle:rulebaseName "OWLPRIME";

oracle:namedModelURI <http://G1> ] .

The object of namedModel can take the same specifications as defaultModel, so virtual models are supported here as well (see also the next item).

Use a virtual model for queries by adding oracle:useVM "TRUE", as shown in the following example. Note that if the specified virtual model does not exist, it will automatically be created on demand.

<#oracle> rdf:type oracle:Dataset;

joseki:poolSize 1 ; ## Number of concurrent connections allowed to this dataset.

oracle:connection

[ a oracle:OracleConnection ;

];

oracle:defaultModel [ oracle:firstModel "ABOX";

oracle:modelName "TBOX";

oracle:rulebaseName "OWLPRIME";

oracle:useVM "TRUE"

] .

For more information, see Section 7.10.1, "Virtual Models Support".

Specify a virtual model as the default model to answer SPARQL queries by using the predicate oracle:virtualModelName, as shown in the following example with a virtual model named TRIPLE_DATA_VM_0:

oracle:defaultModel [ oracle:virtualModelName "TRIPLE_DATA_VM_0" ] .

If the underlying data consists of quads, you can use oracle:virtualModelName with oracle:allGraphs. The presence of oracle:allGraphs causes an instantiation of DatasetGraphOracleSem objects to answer named graph queries. An example is as follows:

oracle:allGraphs [ oracle:virtualModelName "QUAD_DATA_VM_0" ] .

Note that when a virtual model name is specified as the default graph, the endpoint can serve only query requests; SPARQL Update operations are not supported.

Set the queryOptions and inferenceMaintenance properties to change the query behavior and inference update mode. (See the Javadoc for information about QueryOptions and InferenceMaintenanceMode.)

By default, QueryOptions.ALLOW_QUERY_INVALID_AND_DUP and InferenceMaintenanceMode.NO_UPDATE are set, for maximum query flexibility and efficiency.

For every database connection created or used by the Jena Adapter, a client identifier is associated with the connection. The client identifier can be helpful, especially in a Real Application Cluster (Oracle RAC) environment, for isolating Jena Adapter-related activities from other database activities when you are doing performance analysis and tuning.

By default, the client identifier assigned is JenaAdapter. However, you can specify a different value by setting the Java VM clientIdentifier property using the following format:

-Doracle.spatial.rdf.client.jena.clientIdentifier=<identificationString>

To start the tracing of only Jena Adapter-related activities on the database side, you can use the DBMS_MONITOR.CLIENT_ID_TRACE_ENABLE procedure. For example:

SQL> EXECUTE DBMS_MONITOR.CLIENT_ID_TRACE_ENABLE('JenaAdapter', true, true);

By default, the Jena Adapter creates the application tables and any staging tables (the latter used for bulk loading, as explained in Section 7.11) using basic table compression with the following syntax:

CREATE TABLE .... (... column definitions ...) ... compress;

However, if you are licensed to use the Oracle Advanced Compression option no the database, you can set the following JVM property to turn on OLTP compression, which compresses data during all DML operations against the underlying application tables and staging tables:

-Doracle.spatial.rdf.client.jena.advancedCompression="compress for oltp"

Because some applications need to be able to terminate long-running SPARQL queries, an abort framework has been introduced with the Jena Adapter and the Joseki setup. Basically, for queries that may take a long time to run, you must stamp each with a unique query ID (qid) value.

For example, the following SPARQL query selects out the subject of all triples. A query ID (qid) is set so that this query can be terminated upon request.

PREFIX ORACLE_SEM_FS_NS: <http://example.com/semtech#qid=8761>

SELECT ?subject WHERE {?subject ?property ?object }

The qid attribute value is of long integer type. You can choose a value for the qid for a particular query based on your own application needs.

To terminate a SPARQL query that has been submitted with a qid value, applications can send an abort request to a servlet in the following format and specify a matching QID value

http://<hostname>:7001/joseki/querymgt?abortqid=8761

For any non-ASCII characters in the lexical representation of RDF resources, \uHHHH N-Triples encoding is used when the characters are inserted into the Oracle database. (For details about N-Triples encoding, see http://www.w3.org/TR/rdf-testcases/#ntrip_grammar.) Encoding of the constant resources in a SPARQL query is handled in a similar fashion.

Using \uHHHH N-Triples encoding enables support for international characters, such as a mix of Norwegian and Swedish characters, in the Oracle database even if a supported Unicode character set is not being used.

To use the Jena Adapter to perform queries, you can connect as any user (with suitable privileges) and use any models in the semantic network. If your Semantic Technologies environment already meets the requirements, you can go directly to compiling and running Java code that uses the Jena Adapter. If your Semantic Technologies environment is not yet set up to be able to use the Jena Adapter, you can perform actions similar to the following example steps:

Connect as SYS with the SYSDBA role:

sqlplus sys/<password-for-sys> as sysdba

Create a tablespace for the system tables. For example:

CREATE TABLESPACE rdf_users datafile 'rdf_users01.dbf'

size 128M reuse autoextend on next 64M

maxsize unlimited segment space management auto;

Create the semantic network. For example:

EXECUTE sem_apis.create_sem_network('RDF_USERS');

Create a database user (for connecting to the database to use the semantic network and the Jena Adapter). For example:

CREATE USER rdfusr IDENTIFIED BY <password-for-udfusr>

DEFAULT TABLESPACE rdf_users;

Grant the necessary privileges to this database user. For example:

GRANT connect, resource TO rdfusr;

To use the Jena Adapter with your own semantic data, perform the appropriate steps to store data, create a model, and create database indexes, as explained in Section 1.10, "Quick Start for Using Semantic Data". Then perform queries by compiling and running Java code; see Section 7.15 for information about example queries.

To use the Jena Adapter with supplied example data, see Section 7.15.

There are two ways to query semantic data stored in Oracle Database: SEM_MATCH-based SQL statements and SPARQL queries through the Jena Adapter. Queries using each approach are similar in appearance, but there are important behavioral differences. To ensure consistent application behavior, you must understand the differences and use care when dealing with query results coming from SEM_MATCH queries and SPARQL queries.

The following simple examples show the two approaches.

Query 1 (SEM_MATCH-based)

select s, p, o

from table(sem_match('{?s ?p ?o}', sem_models('Test_Model'), ....))

Query 2 (SPARQL query through the Jena Adapter)

select ?s ?p ?o

where {?s ?p ?o}

These two queries perform the same kind of functions; however, there are some important differences. Query 1 (SEM_MATCH-based):

Reads all triples out of Test_Model.

Does not differentiate among URI, bNode, plain literals, and typed literals, and it does not handle long literals.

Does not unescape certain characters (such as '\n').

Query 2 (SPARQL query executed through the Jena Adapter) also reads all triples out of Test_Model (assume it executed a call to ModelOracleSem referring to the same underlying Test_Model). However, Query 2:

Reads out additional columns (as opposed to just the s, p, and o columns with the SEM_MATCH table function), to differentiate URI, bNodes, plain literals, typed literals, and long literals. This is to ensure proper creation of Jena Node objects.

Unescapes those characters that are escaped when stored in Oracle Database

Blank node handling is another difference between the two approaches:

In a SEM_MATCH-based query, blank nodes are always treated as constants.

In a SPARQL query, a blank node that is not wrapped inside < and > is treated as a variable when the query is executed through the Jena Adapter. This matches the SPARQL standard semantics. However, a blank node that is wrapped inside < and > is treated as a constant when the query is executed, and the Jena Adapter adds a proper prefix to the blank node label as required by the underlying data modeling.

The maximum length for the name of a semantic model created using the Jena Adapter API is 22 characters.

This section describes some performance-related features of the Jena Adapter that can enhance SPARQL query processing. These features are performed automatically by default.

This section assumes that you are familiar with SPARQL, including the CONSTRUCT feature and property paths.

SPARQL queries involving DISTINCT, OPTIONAL, FILTER, UNION, ORDER BY, and LIMIT are converted to a single Oracle SEM_MATCH table function. If a query cannot be converted directly to SEM_MATCH because it uses SPARQL features not supported by SEM_MATCH (for example, CONSTRUCT), the Jena Adapter employs a hybrid approach and tries to execute the largest portion of the query using a single SEM_MATCH function while executing the rest using the Jena ARQ query engine.

For example, the following SPARQL query is directly translated to a single SEM_MATCH table function:

PREFIX dc: <http://purl.org/dc/elements/1.1/>

PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

PREFIX foaf: <http://xmlns.com/foaf/0.1/>

SELECT ?person ?name

WHERE {

{?alice foaf:knows ?person . }

UNION {

?person ?p ?name. OPTIONAL { ?person ?x ?name1 }

}

}

However, the following example query is not directly translatable to a single SEM_MATCH table function because of the CONSTRUCT keyword:

PREFIX vcard: <http://www.w3.org/2001/vcard-rdf/3.0#>

CONSTRUCT { <http://example.org/person#Alice> vcard:FN ?obj }

WHERE { { ?x <http://pred/a> ?obj.}

UNION

{ ?x <http://pred/b> ?obj.} }

In this case, the Jena Adapter converts the inner UNION query into a single SEM_MATCH table function, and then passes on the result set to the Jena ARQ query engine for further evaluation.

As defined in Jena, a property path is a possible route through an RDF graph between two graph nodes. Property paths are an extension of SPARQL and are more expressive than basic graph pattern queries, because regular expressions can be used over properties for pattern matching RDF graphs. For more information about property paths, see the documentation for the Jena ARQ query engine.

The Jena Adapter supports all Jena property path types through the integration with the Jena ARQ query engine, but it converts some common path types directly to native SQL hierarchical queries (not based on SEM_MATCH) to improve performance. The following types of property paths are directly converted to SQL by the Jena Adapter when dealing with triple data:

Predicate alternatives: (p1 | p2 | … | pn) where pi is a property URI

Predicate sequences: (p1 / p2 / … / pn) where pi is a property URI

Reverse paths : ( ^ p ) where p is a predicate URI

Complex paths: p+, p*, p{0, n} where p could be an alternative, sequence, reverse path, or property URI

Path expressions that cannot be captured in this grammar are not translated directly to SQL by the Jena Adapter, and they are answered using the Jena query engine.

The following example contains a code snippet using a property path expression with path sequences:

String m = "PROP_PATH";

ModelOracleSem model = ModelOracleSem.createOracleSemModel(oracle, m);

GraphOracleSem graph = new GraphOracleSem(oracle, m);

// populate the RDF Graph

graph.add(Triple.create(Node.createURI("http://a"),

Node.createURI("http://p1"),

Node.createURI("http://b")));

graph.add(Triple.create(Node.createURI("http://b"),

Node.createURI("http://p2"),

Node.createURI("http://c")));

graph.add(Triple.create(Node.createURI("http://c"),

Node.createURI("http://p5"),

Node.createURI("http://d")));

String query =

" SELECT ?s " +

" WHERE {?s (<http://p1>/<http://p2>/<http://p5>)+ <http://d>.}";

QueryExecution qexec =

QueryExecutionFactory.create(QueryFactory.create(query,

Syntax.syntaxARQ), model);

try {

ResultSet results = qexec.execSelect();

ResultSetFormatter.out(System.out, results);

}

finally {

if (qexec != null)

qexec.close();

}

OracleUtils.dropSemanticModel(oracle, m);

model.close();

The Jena Adapter allows you to pass in hints and additional query options. It implements these capabilities by overloading the SPARQL namespace prefix syntax by using Oracle-specific namespaces that contain query options. The namespaces are in the form PREFIX ORACLE_SEM_xx_NS, where xx indicates the type of feature (such as HT for hint or AP for additional predicate)

SQL hints can be passed to a SEM_MATCH query including a line in the following form:

PREFIX ORACLE_SEM_HT_NS: <http://oracle.com/semtech#hint>

Where hint can be any hint supported by SEM_MATCH. For example:

PREFIX ORACLE_SEM_HT_NS: <http://oracle.com/semtech#leading(t0,t1)>

SELECT ?book ?title ?isbn

WHERE { ?book <http://title> ?title. ?book <http://ISBN> ?isbn }

In this example, t0,t1 refers to the first and second patterns in the query.

Note the slight difference in specifying hints when compared to SEM_MATCH. Due to restrictions of namespace value syntax, a comma (,) must be used to separate t0 and t1 (or other hint components) instead of a space.

For more information about using SQL hints, see Section 1.6, "Using the SEM_MATCH Table Function to Query Semantic Data", specifically the material about the HINT0 keyword in the options attribute.

In Oracle Database, using bind variables can reduce query parsing time and increase query efficiency and concurrency. Bind variable support in SPARQL queries is provided through namespace pragma specifications similar to ORACLE_SEM_FS_NS.

Consider a case where an application runs two SPARQL queries, where the second (Query 2) depends on the partial or complete results of the first (Query 1). Some approaches that do not involve bind variables include:

Iterating through results of Query 1 and generating a set of queries. (However, this approach requires as many queries as the number of results of Query 1.)

Constructing a SPARQL filter expression based on results of Query 1.

Treating Query 1 as a subquery.

Another approach in this case is to use bind variables, as in the following sample scenario:

Query 1: SELECT ?x WHERE { ... <some complex query> ... }; Query 2: SELECT ?subject ?x WHERE {?subject <urn:related> ?x .};

The following example shows Query 2 with the syntax for using bind variables with the Jena Adapter:

PREFIX ORACLE_SEM_FS_NS: <http://oracle.com/semtech#no_fall_back,s2s> PREFIX ORACLE_SEM_UEAP_NS: <http://oracle.com/semtech#x$RDFVID%20in(?,?,?)> PREFIX ORACLE_SEM_UEPJ_NS: <http://oracle.com/semtech#x$RDFVID> PREFIX ORACLE_SEM_UEBV_NS: <http://oracle.com/semtech#1,2,3> SELECT ?subject ?x WHERE { ?subject <urn:related> ?x };

This syntax includes using the following namespaces:

ORACLE_SEM_UEAP_NS is like ORACLE_SEM_AP_NS, but the value portion of ORACLE_SEM_UEAP_NS is URL Encoded. Before the value portion is used, it must be URL decoded, and then it will be treated as an additional predicate to the SPARQL query.

In this example, after URL decoding, the value portion (following the # character) of this ORACLE_SEM_UEAP_NS prefix becomes "x$RDFVID in(?,?,?)". The three question marks imply a binding to three values coming from Query 1.

ORACLE_SEM_UEPJ_NS specifies the additional projections involved. In this case, because ORACLE_SEM_UEAP_NS references the x$RDFVID column, which does not appear in the SELECT clause of the query, it must be specified. Multiple projections are separated by commas.

ORACLE_SEM_UEBV_NS specifies the list of bind values that are URL encoded first, and then concatenated and delimited by commas.

Conceptually, the preceding example query is equivalent to the following non-SPARQL syntax query, in which 1, 2, and 3 are treated as bind values:

SELECT ?subject ?x

WHERE {

?subject <urn:related> ?x

}

AND ?x$RDFVID in (1,2,3);

In the preceding SPARQL example of Query 2, the three integers 1, 2, and 3 come from Query 1. You can use the oext:build-uri-for-id function to generate such internal integer IDs for RDF resources. The following example gets the internal integer IDs from Query 1:

PREFIX oext: <http://oracle.com/semtech/jena-adaptor/ext/function#> SELECT ?x (oext:build-uri-for-id(?x) as ?xid) WHERE { ... <some complex query> ... };

The values of ?xid have the form of <rdfvid:integer-value>. The application can strip out the angle brackets and the "rdfvid:" strings to get the integer values and pass them to Query 2.

Consider another case, with a single query structure but potentially many different constants. For example, the following SPARQL query finds the hobby for each user who has a hobby and who logs in to an application. Obviously, different users will provide different <uri> values to this SPARQL query, because users of the application are represented using different URIs.

SELECT ?hobby

WHERE { <uri> <urn:hasHobby> ?hobby };

One approach, which would not use bind variables, is to generate a different SPARQL query for each different <uri> value. For example, user Jane Doe might trigger the execution of the following SPARQL query:

SELECT ?hobby WHERE {

<http://www.example.com/Jane_Doe> <urn:hasHobby> ?hobby };

However, another approach is to use bind variables, as in the following example specifying user Jane Doe:

PREFIX ORACLE_SEM_FS_NS: <http://oracle.com/semtech#no_fall_back,s2s>

PREFIX ORACLE_SEM_UEAP_NS: <http://oracle.com/semtech#subject$RDFVID%20in(ORACLE_ORARDF_RES2VID(?))>

PREFIX ORACLE_SEM_UEPJ_NS: <http://oracle.com/semtech#subject$RDFVID>

PREFIX ORACLE_SEM_UEBV_NS: <http://oracle.com/semtech#http%3a%2f%2fwww.example.com%2fJohn_Doe>

SELECT ?subject ?hobby

WHERE {

?subject <urn:hasHobby> ?hobby

};

Conceptually, the preceding example query is equivalent to the following non-SPARQL syntax query, in which http://www.example.com/Jane_Doe is treated as a bind variable:

SELECT ?subject ?hobby

WHERE {

?subject <urn:hasHobby> ?hobby

}

AND ?subject$RDFVID in (ORACLE_ORARDF_RES2VID('http://www.example.com/Jane_Doe'));

In this example, ORACLE_ORARDF_RES2VID is a function that translates URIs and literals into their internal integer ID representation. This function is created automatically when the Jena Adapter is used to connect to an Oracle database.

The SEM_MATCH filter attribute can specify additional selection criteria as a string in the form of a WHERE clause without the WHERE keyword. Additional WHERE clause predicates can be passed to a SEM_MATCH query including a line in the following form:

PREFIX ORACLE_SEM_AP_NS: <http://oracle.com/semtech#pred>

Where pred reflects the WHERE clause content to be appended to the query. For example:

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

PREFIX ORACLE_SEM_AP_NS:<http://www.oracle.com/semtech#label$RDFLANG='fr'>

SELECT DISTINCT ?inst ?label

WHERE { ?inst a <http://someCLass>. ?inst rdfs:label ?label . }

ORDER BY (?label) LIMIT 20

In this example, a restriction is added to the query that the language type of the label variable must be 'fr'.

Additional query options can be passed to a SEM_MATCH query including a line in the following form:

PREFIX ORACLE_SEM_FS_NS: <http://oracle.com/semtech#option>

Where option reflects a query option (or multiple query options delimited by commas) to be appended to the query. For example:

PREFIX ORACLE_SEM_FS_NS:

<http://oracle.com/semtech#timeout=3,dop=4,INF_ONLY,ORDERED,ALLOW_DUP=T>

SELECT * WHERE {?subject ?property ?object }

The following query options are supported:

ALLOW_DUP=t chooses a faster way to query multiple semantic models, although duplicate results may occur.

BEST_EFFORT_QUERY=t, when used with the TIMEOUT=n option, returns all matches found in n seconds for the SPARQL query.

DEGREE=n specifies, at the statement level, the degree of parallelism (n) for the query. With multi-core or multi-CPU processors, experimenting with different DOP values (such as 4 or 8) may improve performance.

Contrast DEGREE with DOP, which specifies parallelism at the session level. DEGREE is recommended over DOP for use with the Jena Adapter, because DEGREE involves less processing overhead.

DOP=n specifies, at the session level, the degree of parallelism (n) for the query. With multi-core or multi-CPU processors, experimenting with different DOP values (such as 4 or 8) may improve performance.

INF_ONLY causes only the inferred model to be queried.

JENA_EXECUTOR disables the compilation of SPARQL queries to SEM_MATCH (or native SQL); instead, the Jena native query executor will be used.

JOIN=n specifies how results from a SPARQL SERVICE call to a federated query can be joined with other parts of the query. For information about federated queries and the JOIN option, see Section 7.6.4.1.

NO_FALL_BACK causes the underlying query execution engine not to fall back on the Jena execution mechanism if a SQL exception occurs.

ODS=n specifies, at the statement level, the level of dynamic sampling. (For an explanation of dynamic sampling, see the section about estimating statistics with dynamic sampling in Oracle Database Performance Tuning Guide.) Valid values for n are 1 through 10. For example, you could try ODS=3 for complex queries.

ORDERED is translated to a LEADING SQL hint for the query triple pattern joins, while performing the necessary RDF_VALUE$ joins last.

PLAIN_SQL_OPT=F disables the native compilation of queries directly to SQL.

QID=n specifies a query ID number; this feature can be used to cancel the query if it is not responding.

RESULT_CACHE uses the Oracle RESULT_CACHE directive for the query.

REWRITE=F disables ODCI_Table_Rewrite for the SEM_MATCH table function.

SKIP_CLOB=T causes CLOB values not to be returned for the query.

S2S (SPARQL to pure SQL) causes the underlying SEM_MATCH-based query or queries generated based on the SPARQL query to be further converted into SQL queries without using the SEM_MATCH table function. The resulting SQL queries are executed by the Oracle cost-based optimizer, and the results are processed by the Jena Adapter before being passed on to the client. For more information about the S2S option, including benefits and usage information, see Section 7.6.4.2.

S2S is enabled by default for all SPARQL queries. If you want to disable S2S, set the following JVM system property:

-Doracle.spatial.rdf.client.jena.defaultS2S=false

TIMEOUT=n (query timeout) specifies the number of seconds (n) that the query will run until it is terminated. The underlying SQL generated from a SPARQL query can return many matches and can use features like subqueries and assignments, all of which can take considerable time. The TIMEOUT and BEST_EFFORT_QUERY=t options can be used to prevent what you consider excessive processing time for the query.

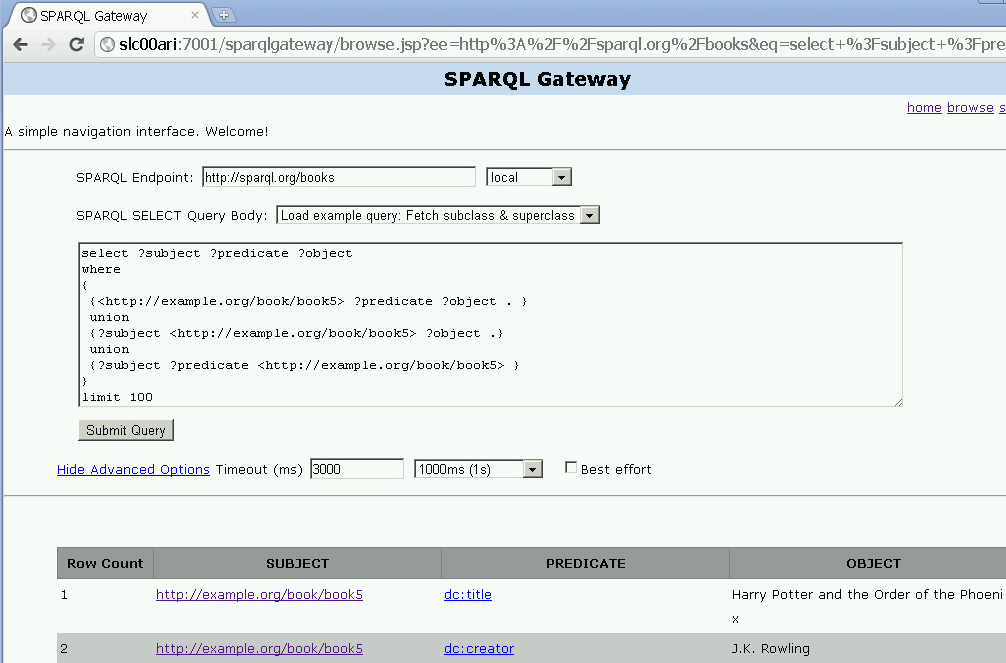

A SPARQL federated query, as described in W3C documents, is a query "over distributed data" that entails "querying one source and using the acquired information to constrain queries of the next source." For more information, see SPARQL 1.1 Federation Extensions (http://www.w3.org/2009/sparql/docs/fed/service).

You can use the JOIN option (described in Section 7.6.4) and the SERVICE keyword in a federated query that uses the Jena Adapter. For example, assume the following query:

SELECT ?s ?s1 ?o

WHERE { ?s1 ?p1 ?s .

{

SERVICE <http://sparql.org/books> { ?s ?p ?o }

}

}

If the local query portion (?s1 ?p1 ?s,) is very selective, you can specify join=2, as shown in the following query:

PREFIX ORACLE_SEM_FS_NS: <http://oracle.com/semtech#join=2>

SELECT ?s ?s1 ?o

WHERE { ?s1 ?p1 ?s .

{

SERVICE <http://sparql.org/books> { ?s ?p ?o }

}

}

In this case, the local query portion (?s1 ?p1 ?s,) is executed locally against the Oracle database. Each binding of ?s from the results is then pushed into the SERVICE part (remote query portion), and a call is made to the service endpoint specified. Conceptually, this approach is somewhat like nested loop join.

If the remote query portion (?s ?s1 ?o) is very selective, you can specify join=3, as shown in the following query, so that the remote portion is executed first and results are used to drive the execution of local portion:

PREFIX ORACLE_SEM_FS_NS: <http://oracle.com/semtech#join=3>

SELECT ?s ?s1 ?o

WHERE { ?s1 ?p1 ?s .

{

SERVICE <http://sparql.org/books> { ?s ?p ?o }

}

}

In this case, a single call is made to the remote service endpoint and each binding of ?s triggers a local query. As with join=2, this approach is conceptually a nested loop based join, but the difference is that the order is switched.

If neither the local query portion nor the remote query portion is very selective, then we can choose join=1, as shown in the following query:

PREFIX ORACLE_SEM_FS_NS: <http://oracle.com/semtech#join=1>

SELECT ?s ?s1 ?o

WHERE { ?s1 ?p1 ?s .

{

SERVICE <http://sparql.org/books> { ?s ?p ?o }

}

}

In this case, the remote query portion and the local portion are executed independently, and the results are joined together by Jena. Conceptually, this approach is somewhat like a hash join.

For debugging or tracing federated queries, you can use the HTTP Analyzer in Oracle JDeveloper to see the underlying SERVICE calls.

The S2S option, described in Section 7.6.4, provides the following potential benefits:

It works well with the RESULT_CACHE option to improve query performance. Using the S2S and RESULT_CACHE options is especially helpful for queries that are executed frequently.

It reduces the parsing time of the SEM_MATCH table function, which can be helpful for applications that involve many dynamically generated SPARQL queries.

It eliminates the limit of 4000 bytes for the query body (the first parameter of the SEM_MATCH table function), which means that longer, more complex queries are supported.

The S2S option causes an internal in-memory cache to be used for translated SQL query statements. The default size of this internal cache is 1024 (that is, 1024 SQL queries); however, you can adjust the size by using the following Java VM property:

-Doracle.spatial.rdf.client.jena.queryCacheSize=<size>

When semantic data is stored, all of the resource values are hashed into IDs, which are stored in the triples table. The mappings from value IDs to full resource values are stored in the MDSYS.RDF_VALUE$ table. At query time, for each selected variable, Oracle Database must perform a join with the RDF_VALUE$ table to retrieve the resource.

However, to reduce the number of joins, you can use the midtier cache option, which causes an in-memory cache on the middle tier to be used for storing mappings between value IDs and resource values. To use this feature, include the following PREFIX pragma in the SPARQL query:

PREFIX ORACLE_SEM_FS_NS: <http://oracle.com/semtech#midtier_cache>

To control the maximum size (in bytes) of the in-memory cache, use the oracle.spatial.rdf.client.jena.cacheMaxSize system property. The default cache maximum size is 1GB.

Note that midtier resource caching is most effective for queries using ORDER BY or DISTINCT (or both) constructs, or queries with multiple projection variables. Midtier cache can be combined with the other options specified in Section 7.6.4.

If you want to pre-populate the cache with all of the resources in a model, use the GraphOracleSem.populateCache or DatasetGraphOracleSem.populateCache method. Both methods take a parameter specifying the number of threads used to build the internal midtier cache. Running either method in parallel can significantly increase the cache building performance on a machine with multiple CPUs (cores).

SPARQL queries through the Jena Adapter can use the following kinds of functions:

Functions in the function library of the Jena ARQ query engine

Native Oracle Database functions for projected variables

User-defined functions

SPARQL queries through the Jena Adapter can use functions in the function library of the Jena ARQ query engine. These queries are executed in the middle tier.

The following examples use the upper-case and namespace functions. In these examples, the prefix fn is <http://www.w3.org/2005/xpath-functions#> and the prefix afn is <http://jena.hpl.hp.com/ARQ/function#>.

PREFIX fn: <http://www.w3.org/2005/xpath-functions#>

PREFIX afn: <http://jena.hpl.hp.com/ARQ/function#>

SELECT (fn:upper-case(?object) as ?object1)

WHERE { ?subject dc:title ?object }

PREFIX fn: <http://www.w3.org/2005/xpath-functions#>

PREFIX afn: <http://jena.hpl.hp.com/ARQ/function#>

SELECT ?subject (afn:namespace(?object) as ?object1)

WHERE { ?subject <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> ?object }

SPARQL queries through the Jena Adapter can use native Oracle Database functions for projected variables. These queries and the functions are executed inside the database. Note that the functions described in this section should not be used together with ARQ functions (described in Section 7.7.1).

This section lists the supported native functions and provides some examples. In the examples, the prefix oext is <http://oracle.com/semtech/jena-adaptor/ext/function#>.

Note:

In the preceding URL, note the spellingjena-adaptor, which is retained for compatibility with existing applications and which must be used in queries. The adapter spelling is used in regular text, to follow Oracle documentation style guidelines.oext:upper-literal converts literal values (except for long literals) to uppercase. For example:

PREFIX oext: <http://oracle.com/semtech/jena-adaptor/ext/function#>

SELECT (oext:upper-literal(?object) as ?object1)

WHERE { ?subject dc:title ?object }

oext:lower-literal converts literal values (except for long literals) to lowercase. For example:

PREFIX oext: <http://oracle.com/semtech/jena-adaptor/ext/function#>

SELECT (oext:lower-literal(?object) as ?object1)

WHERE { ?subject dc:title ?object }

oext:build-uri-for-id converts the value ID of a URI, bNode, or literal into a URI form. For example:

PREFIX oext: <http://oracle.com/semtech/jena-adaptor/ext/function#>

SELECT (oext:build-uri-for-id(?object) as ?object1)

WHERE { ?subject dc:title ?object }

An example of the output might be: <rdfvid:1716368199350136353>

One use of this function is to allow Java applications to maintain in memory a mapping of those value IDs to the lexical form of URIs, bNodes, or literals. The MDSYS.RDF_VALUE$ table provides such a mapping in Oracle Database.

For a given variable ?var, if only oext:build-uri-for-id(?var) is projected, the query performance is likely to be faster because fewer internal table join operations are needed to answer the query.

oext:literal-strlen returns the length of literal values (except for long literals). For example:

PREFIX oext: <http://oracle.com/semtech/jena-adaptor/ext/function#>

SELECT (oext:literal-strlen(?object) as ?objlen)

WHERE { ?subject dc:title ?object }

SPARQL queries through the Jena Adapter can use user-defined functions that are stored in the database.

In the following example, assume that you want to define a string length function (my_strlen) that handles long literals (CLOB) as well as short literals. On the SPARQL query side, this function can be referenced under the namespace of ouext, which is http://oracle.com/semtech/jena-adaptor/ext/user-def-function#.

PREFIX ouext: <http://oracle.com/semtech/jena-adaptor/ext/user-def-function#>

SELECT ?subject ?object (ouext:my_strlen(?object) as ?obj1)

WHERE { ?subject dc:title ?object }

Inside the database, functions including my_strlen, my_strlen_cl, my_strlen_la, my_strlen_lt, and my_strlen_vt are defined to implement this capability. Conceptually, the return values of these functions are mapped as shown in Table 7-1.

Table 7-1 Functions and Return Values for my_strlen Example

| Function Name | Return Value |

|---|---|

|

my_strlen |

<VAR> |

|

my_strlen_cl |

<VAR>$RDFCLOB |

|

my_strlen_la |

<VAR>$RDFLANG |

|

my_strlen_lt |

<VAR>$RDFLTYP |

|

my_strlen_vt |

<VAR>$RDFVTYP |

A set of functions (five in all) is used to implement a user-defined function that can be referenced from SPARQL, because this aligns with the internal representation of an RDF resource (in MDSYS.RDF_VALUE$). There are five major columns describing an RDF resource in terms of its value, language, literal type, long value, and value type, and these five columns can be selected out using SEM_MATCH. In this context, a user-defined function simply converts one RDF resource that is represented by five columns to another RDF resource.

These functions are defined as follows:

create or replace function my_strlen(rdfvtyp in varchar2, rdfltyp in varchar2, rdflang in varchar2, rdfclob in clob, value in varchar2 ) return varchar2 as ret_val varchar2(4000); begin -- value if (rdfvtyp = 'LIT') then if (rdfclob is null) then return length(value); else return dbms_lob.getlength(rdfclob); end if; else -- Assign -1 for non-literal values so that application can -- easily differentiate return '-1'; end if; end; / create or replace function my_strlen_cl(rdfvtyp in varchar2, rdfltyp in varchar2, rdflang in varchar2, rdfclob in clob, value in varchar2 ) return clob as begin return null; end; / create or replace function my_strlen_la(rdfvtyp in varchar2, rdfltyp in varchar2, rdflang in varchar2, rdfclob in clob, value in varchar2 ) return varchar2 as begin return null; end; / create or replace function my_strlen_lt(rdfvtyp in varchar2, rdfltyp in varchar2, rdflang in varchar2, rdfclob in clob, value in varchar2 ) return varchar2 as ret_val varchar2(4000); begin -- literal type return 'http://www.w3.org/2001/XMLSchema#integer'; end; / create or replace function my_strlen_vt(rdfvtyp in varchar2, rdfltyp in varchar2, rdflang in varchar2, rdfclob in clob, value in varchar2 ) return varchar2 as ret_val varchar2(3); begin return 'LIT'; end; /

User-defined functions can also accept a parameter of VARCHAR2 type. The following five functions together define a my_shorten_str function that accepts an integer (in VARCHAR2 form) for the substring length and returns the substring. (The substring in this example is 12 characters, and it must not be greater than 4000 bytes.)

-- SPARQL query that returns the first 12 characters of literal values.

--

PREFIX ouext: <http://oracle.com/semtech/jena-adaptor/ext/user-def-function#>

SELECT (ouext:my_shorten_str(?object, "12") as ?obj1) ?subject

WHERE { ?subject dc:title ?object }

create or replace function my_shorten_str(rdfvtyp in varchar2,

rdfltyp in varchar2,

rdflang in varchar2,

rdfclob in clob,

value in varchar2,

arg in varchar2

) return varchar2

as

ret_val varchar2(4000);

begin

-- value

if (rdfvtyp = 'LIT') then

if (rdfclob is null) then

return substr(value, 1, to_number(arg));

else

return dbms_lob.substr(rdfclob, to_number(arg), 1);

end if;

else

return null;

end if;

end;

/

create or replace function my_shorten_str_cl(rdfvtyp in varchar2,

rdfltyp in varchar2,

rdflang in varchar2,

rdfclob in clob,

value in varchar2,

arg in varchar2

) return clob

as

ret_val clob;

begin

-- lob

return null;

end;

/

create or replace function my_shorten_str_la(rdfvtyp in varchar2,

rdfltyp in varchar2,

rdflang in varchar2,

rdfclob in clob,

value in varchar2,

arg in varchar2

) return varchar2

as

ret_val varchar2(4000);

begin

-- lang

if (rdfvtyp = 'LIT') then

return rdflang;

else

return null;

end if;

end;

/

create or replace function my_shorten_str_lt(rdfvtyp in varchar2,

rdfltyp in varchar2,

rdflang in varchar2,

rdfclob in clob,

value in varchar2,

arg in varchar2

) return varchar2

as

ret_val varchar2(4000);

begin

-- literal type

ret_val := rdfltyp;

return ret_val;

end;

/

create or replace function my_shorten_str_vt(rdfvtyp in varchar2,

rdfltyp in varchar2,

rdflang in varchar2,

rdfclob in clob,

value in varchar2,

arg in varchar2

) return varchar2

as

ret_val varchar2(3);

begin

return 'LIT';

end;

/

The Jena Adapter supports SPARQL Update (http://www.w3.org/TR/sparql11-update/), also referred to as SPARUL. The primary programming APIs involve the Jena class UpdateAction (in package com.hp.hpl.jena.update) and the Jena Adapter classes GraphOracleSem and DatasetGraphOracleSem. Example 7-1 shows a SPARQL Update operation removes all triples in named graph <http://example/graph> from the relevant model stored in the database.

Example 7-1 Simple SPARQL Update

GraphOracleSem graphOracleSem = .... ; DatasetGraphOracleSem dsgos = DatasetGraphOracleSem.createFrom(graphOracleSem); // SPARQL Update operation String szUpdateAction = "DROP GRAPH <http://example/graph>"; // Execute the Update against a DatasetGraph instance (can be a Jena Model as well) UpdateAction.parseExecute(szUpdateAction, dsgos);

Note that Oracle Database does not keep any information about an empty named graph. This implies if you invoke CREATE GRAPH <graph_name> without adding any triples into this graph, then no additional rows in the application table or the underlying RDF_LINK$ table will be created. To an Oracle database, you can safely skip the CREATE GRAPH step, as is the case in Example 7-1.

Example 7-2 shows a SPARQL Update operation (from ARQ 2.8.8) involving multiple insert and delete operations.

Example 7-2 SPARQL Update with Insert and Delete Operations

PREFIX : <http://example/>

CREATE GRAPH <http://example/graph> ;

INSERT DATA { :r :p 123 } ;

INSERT DATA { :r :p 1066 } ;

DELETE DATA { :r :p 1066 } ;

INSERT DATA {

GRAPH <http://example/graph> { :r :p 123 . :r :p 1066 }

} ;

DELETE DATA {

GRAPH <http://example/graph> { :r :p 123 }

}

After running the update operation in Example 7-2 against an empty DatasetGraphOracleSem, running the SPARQL query SELECT ?s ?p ?o WHERE {?s ?p ?o} generates the following response:

----------------------------------------------------------------------------------------------- | s | p | o | =============================================================================================== | <http://example/r> | <http://example/p> | "123"^^<http://www.w3.org/2001/XMLSchema#decimal> | -----------------------------------------------------------------------------------------------

Using the same data, running the SPARQL query SELECT ?g ?s ?p ?o where {GRAPH ?g {?s ?p ?o}} generates the following response:

------------------------------------------------------------------------------------------------------------------------- | g | s | p | o | ========================================================================================================================= | <http://example/graph> | <http://example/r> | <http://example/p> | "1066"^^<http://www.w3.org/2001/XMLSchema#decimal> | -------------------------------------------------------------------------------------------------------------------------

In addition to using the Java API for SPARQL Update operations, you can configure Joseki to accept SPARQL Update operations by removing the comment (##) characters at the start of the following lines in the joseki-config.ttl file.

## <#serviceUpdate> ## rdf:type joseki:Service ; ## rdfs:label "SPARQL/Update" ; ## joseki:serviceRef "update/service" ; ## # dataset part ## joseki:dataset <#oracle>; ## # Service part. ## # This processor will not allow either the protocol, ## # nor the query, to specify the dataset. ## joseki:processor joseki:ProcessorSPARQLUpdate ## . ## ## <#serviceRead> ## rdf:type joseki:Service ; ## rdfs:label "SPARQL" ; ## joseki:serviceRef "sparql/read" ; ## # dataset part ## joseki:dataset <#oracle> ; ## Same dataset ## # Service part. ## # This processor will not allow either the protocol, ## # nor the query, to specify the dataset. ## joseki:processor joseki:ProcessorSPARQL_FixedDS ; ## .

After you edit the joseki-config.ttl file, you must restart the Joseki Web application. You can then try a simple update operation, as follows:

In your browser, go to: http://<hostname>:7001/joseki/update.html

Type or paste the following into the text box:

PREFIX : <http://example/>

INSERT DATA {

GRAPH <http://example/g1> { :r :p 455 }

}

Click Perform SPARQL Update.

To verify that the update operation was successful, go to http://<hostname>:7001/joseki and enter the following query:

SELECT *

WHERE

{ GRAPH <http://example/g1> {?s ?p ?o}};

The response should contain the following triple:

<http://example/r> <http://example/p> "455"^^<http://www.w3.org/2001/XMLSchema#decimal>

A SPARQL Update can also be sent using an HTTP POST operation to the http://<hostname>:7001/joseki/update/service. For example, you can use curl (http://en.wikipedia.org/wiki/CURL) to send an HTTP POST request to perform the update operation:

curl --data "request=PREFIX%20%3A%20%3Chttp%3A%2F%2Fexample%2F%3E%20%0AINSERT%20DATA%20%7B%0A%20%20GRAPH%20%3Chttp%3A%2F%2Fexample%2Fg1%3E%20%7B%20%3Ar%20%3Ap%20888%20%7D%0A%7D%0A" http://hostname:7001/joseki/update/service

In the preceding example, the URL encoded string is a simple INSERT operation into a named graph. After decoding, it reads as follows:

PREFIX : <http://example/>

INSERT DATA {

GRAPH <http://example/g1> { :r :p 888 }

You can perform analytical functions on RDF data by using the SemNetworkAnalyst class in the oracle.spatial.rdf.client.jena package. This support integrates the Oracle Spatial network data model (NDM) logic with the underlying RDF data structures. Therefore, to use analytical functions on RDF data, you must be familiar with the Oracle Spatial NDM, which is documented in Oracle Spatial Topology and Network Data Models Developer's Guide.

The required NDM Java libraries, including sdonm.jar and sdoutl.jar, are under the directory $ORACLE_HOME/md/jlib. Note that xmlparserv2.jar (under $ORACLE_HOME/xdk/lib) must be included in the classpath definition.

Example 7-3 uses the SemNetworkAnalyst class, which internally uses the NDM NetworkAnalyst API

Example 7-3 Performing Analytical functions on RDF Data

Oracle oracle = new Oracle(jdbcUrl, user, password);

GraphOracleSem graph = new GraphOracleSem(oracle, modelName);

Node nodeA = Node.createURI("http://A");

Node nodeB = Node.createURI("http://B");

Node nodeC = Node.createURI("http://C");

Node nodeD = Node.createURI("http://D");

Node nodeE = Node.createURI("http://E");

Node nodeF = Node.createURI("http://F");

Node nodeG = Node.createURI("http://G");

Node nodeX = Node.createURI("http://X");

// An anonymous node

Node ano = Node.createAnon(new AnonId("m1"));

Node relL = Node.createURI("http://likes");

Node relD = Node.createURI("http://dislikes");

Node relK = Node.createURI("http://knows");

Node relC = Node.createURI("http://differs");

graph.add(new Triple(nodeA, relL, nodeB));

graph.add(new Triple(nodeA, relC, nodeD));

graph.add(new Triple(nodeB, relL, nodeC));

graph.add(new Triple(nodeA, relD, nodeC));

graph.add(new Triple(nodeB, relD, ano));

graph.add(new Triple(nodeC, relL, nodeD));

graph.add(new Triple(nodeC, relK, nodeE));

graph.add(new Triple(ano, relL, nodeD));

graph.add(new Triple(ano, relL, nodeF));

graph.add(new Triple(ano, relD, nodeB));

// X only likes itself

graph.add(new Triple(nodeX, relL, nodeX));

graph.commitTransaction();

HashMap<Node, Double> costMap = new HashMap<Node, Double>();

costMap.put(relL, Double.valueOf((double)0.5));

costMap.put(relD, Double.valueOf((double)1.5));

costMap.put(relC, Double.valueOf((double)5.5));

graph.setDOP(4); // this allows the underlying LINK/NODE tables

// and indexes to be created in parallel.

SemNetworkAnalyst sna = SemNetworkAnalyst.getInstance(

graph, // network data source

true, // directed graph

true, // cleanup existing NODE and LINK table

costMap

);

psOut.println("From nodeA to nodeC");

Node[] nodeArray = sna.shortestPathDijkstra(nodeA, nodeC);

printNodeArray(nodeArray, psOut);

psOut.println("From nodeA to nodeD");

nodeArray = sna.shortestPathDijkstra( nodeA, nodeD);

printNodeArray(nodeArray, psOut);

psOut.println("From nodeA to nodeF");

nodeArray = sna.shortestPathAStar(nodeA, nodeF);

printNodeArray(nodeArray, psOut);

psOut.println("From ano to nodeC");

nodeArray = sna.shortestPathAStar(ano, nodeC);

printNodeArray(nodeArray, psOut);

psOut.println("From ano to nodeX");

nodeArray = sna.shortestPathAStar(ano, nodeX);

printNodeArray(nodeArray, psOut);

graph.close();

oracle.dispose();

...

...

// A helper function to print out a path

public static void printNodeArray(Node[] nodeArray, PrintStream psOut)

{

if (nodeArray == null) {

psOut.println("Node Array is null");

return;

}

if (nodeArray.length == 0) {psOut.println("Node Array is empty"); }

int iFlag = 0;

psOut.println("printNodeArray: full path starts");

for (int iHops = 0; iHops < nodeArray.length; iHops++) {

psOut.println("printNodeArray: full path item " + iHops + " = "

+ ((iFlag == 0) ? "[n] ":"[e] ") + nodeArray[iHops]);

iFlag = 1 - iFlag;

}

}

In Example 7-3:

A GraphOracleSem object is constructed and a few triples are added to the GraphOracleSem object. These triples describe several individuals and their relationships including likes, dislikes, knows, and differs.

A cost mapping is constructed to assign a numeric cost value to different links/predicates (of the RDF graph). In this case, 0.5, 1.5, and 5.5 are assigned to predicates likes, dislikes, and differs, respectively. This cost mapping is optional. If the mapping is absent, then all predicates will be assigned the same cost 1. When cost mapping is specified, this mapping does not need to be complete; for predicates not included in the cost mapping, a default value of 1 is assigned.

The output of Example 7-3 is as follows. In this output, the shortest paths are listed for the given start and end nodes. Note that the return value of sna.shortestPathAStar(ano, nodeX) is null because there is no path between these two nodes.

From nodeA to nodeC printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://A ## "n" denotes Node printNodeArray: full path item 1 = [e] http://likes ## "e" denotes Edge (Link) printNodeArray: full path item 2 = [n] http://B printNodeArray: full path item 3 = [e] http://likes printNodeArray: full path item 4 = [n] http://C From nodeA to nodeD printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://A printNodeArray: full path item 1 = [e] http://likes printNodeArray: full path item 2 = [n] http://B printNodeArray: full path item 3 = [e] http://likes printNodeArray: full path item 4 = [n] http://C printNodeArray: full path item 5 = [e] http://likes printNodeArray: full path item 6 = [n] http://D From nodeA to nodeF printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://A printNodeArray: full path item 1 = [e] http://likes printNodeArray: full path item 2 = [n] http://B printNodeArray: full path item 3 = [e] http://dislikes printNodeArray: full path item 4 = [n] m1 printNodeArray: full path item 5 = [e] http://likes printNodeArray: full path item 6 = [n] http://F From ano to nodeC printNodeArray: full path starts printNodeArray: full path item 0 = [n] m1 printNodeArray: full path item 1 = [e] http://dislikes printNodeArray: full path item 2 = [n] http://B printNodeArray: full path item 3 = [e] http://likes printNodeArray: full path item 4 = [n] http://C From ano to nodeX Node Array is null

The underlying RDF graph view (SEMM_<model_name> or RDFM_<model_name>) cannot be used directly by NDM functions, and so SemNetworkAnalyst creates necessary tables that contain the nodes and links that are derived from a given RDF graph. These tables are not updated automatically when the RDF graph changes; rather, you can set the cleanup parameter in SemNetworkAnalyst.getInstance to true, to remove old node and link tables and to rebuild updated tables.

Example 7-4 implements the NDM' nearestNeighbors function on top of semantic data. This gets a NetworkAnalyst object from the SemNetworkAnalyst instance, gets the node ID, creates PointOnNet objects, and processes LogicalSubPath objects.

Example 7-4 Implementing NDM nearestNeighbors Function on Top of Semantic Data

%cat TestNearestNeighbor.java

import java.io.*;

import java.util.*;

import com.hp.hpl.jena.query.*;

import com.hp.hpl.jena.rdf.model.Model;

import com.hp.hpl.jena.util.FileManager;

import com.hp.hpl.jena.util.iterator.*;

import com.hp.hpl.jena.graph.*;

import com.hp.hpl.jena.update.*;

import com.hp.hpl.jena.sparql.core.DataSourceImpl;

import oracle.spatial.rdf.client.jena.*;

import oracle.spatial.rdf.client.jena.SemNetworkAnalyst;

import oracle.spatial.network.lod.LODGoalNode;

import oracle.spatial.network.lod.LODNetworkConstraint;

import oracle.spatial.network.lod.NetworkAnalyst;

import oracle.spatial.network.lod.PointOnNet;

import oracle.spatial.network.lod.LogicalSubPath;

/**

* This class implements a nearestNeighbors function on top of semantic data

* using public APIs provided in SemNetworkAnalyst and Oracle Spatial NDM

*/

public class TestNearestNeighbor

{

public static void main(String[] args) throws Exception

{

String szJdbcURL = args[0];

String szUser = args[1];

String szPasswd = args[2];

PrintStream psOut = System.out;

Oracle oracle = new Oracle(szJdbcURL, szUser, szPasswd);

String szModelName = "test_nn";

// First construct a TBox and load a few axioms

ModelOracleSem model = ModelOracleSem.createOracleSemModel(oracle, szModelName);

String insertString =

" PREFIX my: <http://my.com/> " +

" INSERT DATA " +

" { my:A my:likes my:B . " +

" my:A my:likes my:C . " +

" my:A my:knows my:D . " +

" my:A my:dislikes my:X . " +

" my:A my:dislikes my:Y . " +

" my:C my:likes my:E . " +

" my:C my:likes my:F . " +

" my:C my:dislikes my:M . " +

" my:D my:likes my:G . " +

" my:D my:likes my:H . " +

" my:F my:likes my:M . " +

" } ";

UpdateAction.parseExecute(insertString, model);

GraphOracleSem g = model.getGraph();

g.commitTransaction();

g.setDOP(4);

HashMap<Node, Double> costMap = new HashMap<Node, Double>();

costMap.put(Node.createURI("http://my.com/likes"), Double.valueOf(1.0));

costMap.put(Node.createURI("http://my.com/dislikes"), Double.valueOf(4.0));

costMap.put(Node.createURI("http://my.com/knows"), Double.valueOf(2.0));

SemNetworkAnalyst sna = SemNetworkAnalyst.getInstance(

g, // source RDF graph

true, // directed graph

true, // cleanup old Node/Link tables

costMap

);

Node nodeStart = Node.createURI("http://my.com/A");

long origNodeID = sna.getNodeID(nodeStart);

long[] lIDs = {origNodeID};

// translate from the original ID

long nodeID = (sna.mapNodeIDs(lIDs))[0];

NetworkAnalyst networkAnalyst = sna.getEmbeddedNetworkAnalyst();

LogicalSubPath[] lsps = networkAnalyst.nearestNeighbors(

new PointOnNet(nodeID), // startPoint

6, // numberOfNeighbors

1, // searchLinkLevel

1, // targetLinkLevel

(LODNetworkConstraint) null, // constraint

(LODGoalNode) null // goalNodeFilter

);

if (lsps != null) {

for (int idx = 0; idx < lsps.length; idx++) {

LogicalSubPath lsp = lsps[idx];

Node[] nodePath = sna.processLogicalSubPath(lsp, nodeStart);

psOut.println("Path " + idx);

printNodeArray(nodePath, psOut);

}

}

g.close();

sna.close();

oracle.dispose();

}

public static void printNodeArray(Node[] nodeArray, PrintStream psOut)

{

if (nodeArray == null) {

psOut.println("Node Array is null");

return;

}

if (nodeArray.length == 0) {

psOut.println("Node Array is empty");

}

int iFlag = 0;

psOut.println("printNodeArray: full path starts");

for (int iHops = 0; iHops < nodeArray.length; iHops++) {

psOut.println("printNodeArray: full path item " + iHops + " = "

+ ((iFlag == 0) ? "[n] ":"[e] ") + nodeArray[iHops]);

iFlag = 1 - iFlag;

}

}

}

The output of Example 7-4 is as follows.

Path 0 printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://my.com/A printNodeArray: full path item 1 = [e] http://my.com/likes printNodeArray: full path item 2 = [n] http://my.com/C Path 1 printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://my.com/A printNodeArray: full path item 1 = [e] http://my.com/likes printNodeArray: full path item 2 = [n] http://my.com/B Path 2 printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://my.com/A printNodeArray: full path item 1 = [e] http://my.com/knows printNodeArray: full path item 2 = [n] http://my.com/D Path 3 printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://my.com/A printNodeArray: full path item 1 = [e] http://my.com/likes printNodeArray: full path item 2 = [n] http://my.com/C printNodeArray: full path item 3 = [e] http://my.com/likes printNodeArray: full path item 4 = [n] http://my.com/E Path 4 printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://my.com/A printNodeArray: full path item 1 = [e] http://my.com/likes printNodeArray: full path item 2 = [n] http://my.com/C printNodeArray: full path item 3 = [e] http://my.com/likes printNodeArray: full path item 4 = [n] http://my.com/F Path 5 printNodeArray: full path starts printNodeArray: full path item 0 = [n] http://my.com/A printNodeArray: full path item 1 = [e] http://my.com/knows printNodeArray: full path item 2 = [n] http://my.com/D printNodeArray: full path item 3 = [e] http://my.com/likes printNodeArray: full path item 4 = [n] http://my.com/H

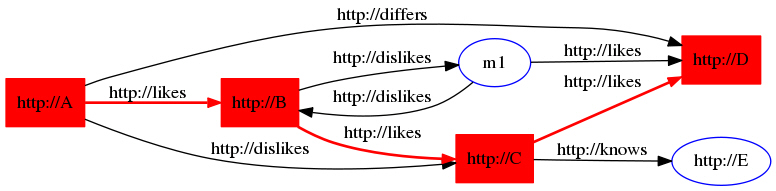

It is sometimes useful to see contextual information about a path in a graph, in addition to the path itself. The buildSurroundingSubGraph method in the SemNetworkAnalyst class can output a DOT file (graph description language file, extension .gv) into the specified Writer object. For each node in the path, up to ten direct neighbors are used to produce a surrounding subgraph for the path. Example 7-5 shows the usage, specifically the output from the analytical functions used in Example 7-3, "Performing Analytical functions on RDF Data".

Example 7-5 Generating a DOT File with Contextual Information

nodeArray = sna.shortestPathDijkstra(nodeA, nodeD);

printNodeArray(nodeArray, psOut);

FileWriter dotWriter = new FileWriter("Shortest_Path_A_to_D.gv");

sna.buildSurroundingSubGraph(nodeArray, dotWriter);

The generated output DOT file from Example 7-5 is straightforward, as shown in the following example:

% cat Shortest_Path_A_to_D.gv

digraph { rankdir = LR; charset="utf-8";

"Rhttp://A" [ label="http://A" shape=rectangle,color=red,style = filled, ];

"Rhttp://B" [ label="http://B" shape=rectangle,color=red,style = filled, ];

"Rhttp://A" -> "Rhttp://B" [ label="http://likes" color=red, style=bold, ];

"Rhttp://C" [ label="http://C" shape=rectangle,color=red,style = filled, ];

"Rhttp://A" -> "Rhttp://C" [ label="http://dislikes" ];

"Rhttp://D" [ label="http://D" shape=rectangle,color=red,style = filled, ];

"Rhttp://A" -> "Rhttp://D" [ label="http://differs" ];

"Rhttp://B" -> "Rhttp://C" [ label="http://likes" color=red, style=bold, ];

"Rm1" [ label="m1" shape=ellipse,color=blue, ];

"Rhttp://B" -> "Rm1" [ label="http://dislikes" ];

"Rm1" -> "Rhttp://B" [ label="http://dislikes" ];

"Rhttp://C" -> "Rhttp://D" [ label="http://likes" color=red, style=bold, ];

"Rhttp://E" [ label="http://E" shape=ellipse,color=blue, ];

"Rhttp://C" -> "Rhttp://E" [ label="http://knows" ];

"Rm1" -> "Rhttp://D" [ label="http://likes" ];

}

You can also use methods in the SemNetworkAnalyst and GraphOracleSem classes to produce more sophisticated visualization of the analytical function output.

You can convert the preceding DOT file into a variety of image formats. Figure 7-1 is an image representing the information in the preceding DOT file.

Figure 7-1 Visual Representation of Analytical Function Output

This section describes some of the Oracle Database Semantic Technologies features that are exposed by the Jena Adapter. For comprehensive documentation of the API calls that support the available features, see the Jena Adapter reference information (Javadoc). For additional information about the server-side features exposed by the Jena Adapter, see the relevant chapters in this manual.

Virtual models (explained in Section 1.3.8) are specified in the GraphOracleSem constructor, and they are handled transparently. If a virtual model exists for the model-rulebase combination, it is used in query answering; if such a virtual model does not exist, it is created in the database.

Note:

Virtual model support through the Jena Adapter is available only with Oracle Database Release 11.2 or later.The following example reuses an existing virtual model.

String modelName = "EX";

String m1 = "EX_1";

ModelOracleSem defaultModel =

ModelOracleSem.createOracleSemModel(oracle, modelName);

// create these models in case they don't exist

ModelOracleSem model1 = ModelOracleSem.createOracleSemModel(oracle, m1);

String vmName = "VM_" + modelName;

//create a virtual model containing EX and EX_1

oracle.executeCall(

"begin sem_apis.create_virtual_model(?,sem_models('"+ m1 + "','"+ modelName+"'),null); end;",vmName);

String[] modelNames = {m1};

String[] rulebaseNames = {};

Attachment attachment = Attachment.createInstance(modelNames, rulebaseNames,

InferenceMaintenanceMode.NO_UPDATE, QueryOptions.ALLOW_QUERY_VALID_AND_DUP);

// vmName is passed to the constructor, so GraphOracleSem will use the virtual

// model named vmname (if the current user has read privileges on it)

GraphOracleSem graph = new GraphOracleSem(oracle, modelName, attachment, vmName);

graph.add(Triple.create(Node.createURI("urn:alice"),

Node.createURI("http://xmlns.com/foaf/0.1/mbox"),

Node.createURI("mailto:alice@example")));

ModelOracleSem model = new ModelOracleSem(graph);

String queryString =

" SELECT ?subject ?object WHERE { ?subject ?p ?object } ";

Query query = QueryFactory.create(queryString) ;

QueryExecution qexec = QueryExecutionFactory.create(query, model) ;

try {

ResultSet results = qexec.execSelect() ;

for ( ; results.hasNext() ; ) {

QuerySolution soln = results.nextSolution() ;

psOut.println("soln " + soln);

}

}

finally {

qexec.close() ;

}

OracleUtils.dropSemanticModel(oracle, modelName);

OracleUtils.dropSemanticModel(oracle, m1);

oracle.dispose();

You can also use the GraphOracleSem constructor to create a virtual model, as in the following example:

GraphOracleSem graph = new GraphOracleSem(oracle, modelName, attachment, true);

In this example, the fourth parameter (true) specifies that a virtual model needs to be created for the specified modelName and attachment.

Oracle Database Connection Pooling is provided through the Jena Adapter OraclePool class. Once this class is initialized, it can return Oracle objects out of its pool of available connections. Oracle objects are essentially database connection wrappers. After dispose is called on the Oracle object, the connection is returned to the pool. More information about using OraclePool can be found in the API reference information (Javadoc).

The following example sets up an OraclePool object with five (5) initial connections.

public static void main(String[] args) throws Exception

{

String szJdbcURL = args[0];