| Oracle® Communications Data Model Implementation and Operations Guide Release 11.3.2 E28442-05 |

|

|

PDF · Mobi · ePub |

| Oracle® Communications Data Model Implementation and Operations Guide Release 11.3.2 E28442-05 |

|

|

PDF · Mobi · ePub |

This chapter discusses the ETL (extraction, transformation and loading) programs you use to populate an Oracle Communications Data Model warehouse. It includes the following topics:

ETL for the Foundation Layer of an Oracle Communications Data Model Warehouse

Performing an Initial Load of an Oracle Communications Data Model Warehouse

Refreshing the Data in an Oracle Communications Data Model Warehouse

Managing Errors During Oracle Communications Data Model Intra-ETL Execution

Figure 2-1, "Layers of an Oracle Communications Data Model Warehouse" illustrated the three layers in Oracle Communications Data Model warehouse environment: the optional staging layer, the foundation layer, and the access layer. As illustrated by Figure 4-1, you use two types of ETL (extraction, transformation and loading) to populate these layers:

Source-ETL. ETL that populates the staging layer (if any) and the foundation layer (that is, the base, reference, and lookup tables) with data from the operational system is known as source ETL.

Oracle Communications Data Model does not include source-ETL scripts. Unless you are using an application adapter for Oracle Communications Data Model, you must create source-ETL yourself using your understanding of your operational and other source systems and your customized Oracle Communications Data Model. See "ETL for the Foundation Layer of an Oracle Communications Data Model Warehouse" for more information on creating source-ETL.

Intra-ETL. ETL that populates the access layer (that is, the derived tables, aggregate tables, materialized views, OLAP cubes, and data mining models) using the data in the foundation layer is known as intra-ETL.

Oracle Communications Data Model does include intra-ETL. You can modify the default intra-ETL to populate a customized access layer from a customized foundation layer. See "Customizing Intra-ETL for Oracle Communications Data Model" for more information on the intra-ETL.

ETL that populates the foundation layer of an Oracle Communications Data Model warehouse (that is, the base, reference, and lookup tables) with data from an operational system is known as source-ETL.

You can populate the foundation layer of an Oracle Communications Data Model warehouse in the following ways:

If an application adapter for Oracle Communications Data Model is available for the system from which you want to populate the foundation layer of an Oracle Communications Data Model warehouse, you can use that adapter to populate the foundation layer. For more information, see "Using an Application Adapter to Populate the Foundation Layer".

Write your own source-ETL scripts using Oracle Data Integrator or another ETL tool and then use those scripts to populate the foundation layer. For more information, see "Writing Your Own Source-ETL".

If an Application Adapter for Oracle Communications Data Model is available for the application that populates your Operational system, you use that adapter to populate the foundation layer of your Oracle Communications Data Model warehouse.

If you are not using an application adapter for Oracle Communications Data Model, you must write your own source-ETL scripts using Oracle Data Integrator or another ETL tool or mapping tool.

The following topics provide general information about writing source-ETL:

ETL Architecture for Oracle Communications Data Model Source-ETL

Creating a Source to Target Mapping Document for the Source-ETL

Designing a Plan for Rectifying Source-ETL Data Quality Problems

Keep the following points in mind when designing and writing source-ETL for Oracle Communications Data Model:

You can populate the calendar data by using the calendar population scripts provided with Oracle Communications Data Model and described in Oracle Communications Data Model Reference.

Populate the tables in the following order:

Lookup tables

Reference tables

Base tables

Analyze the tables in one category before loading the tables in the next category (for example, analyze the reference tables before loading the lookup tables). Additionally, you must analyze all of the tables loaded by the source-ETL process before executing the intra-ETL processes).

See:

The topic about analyzing tables, indexes, and clusters in Oracle Database Administrator's Guide.ETL (or EL-T, that is, Extract, Load and Transform) first extracts data from the original sources, assures the quality of the data, cleans the data, and makes the data consistent across the original sources. ETL then populates the physical objects with the "clean" data so that query tools, report writers, dashboards and so on can access the data.

The fundamental services upon which data acquisition is constructed are as follows:

Data sourcing

Data movement

Data transformation

Data loading

From a logical architecture perspective, there are many different ways to configure these building blocks for delivering data acquisition services. The major architectural styles available that cover a range of options to be targeted within a data warehousing architecture include:

Batch Extract, Transform, and Load and Batch Extract, Load, Transform, Load

Batch Extract, Transform and Load (ETL) and Batch Extract, Load, Transform, Load (ELTL) are the traditional architecture's in a data warehouse implementation. The difference between them is where the transformation proceed in or out of the database.

Batch Hybrid Extract, Transform, Load, Transform, Load

Batch Hybrid Extract, Transform, Load, Transform, Load (ETLTL) is a hybrid strategy. This strategy provides the most flexibility to remove hand coding approaches to transformation design, apply a metadata-driven approach, and still be able to leverage the data processing capabilities of the enterprise warehouse. In this targeted design, the transformation processing is first performed outside the warehouse as a pre-processing step before loading the staging tables, and then further transformation processing is performed within the data warehouse before the final load into the target tables.

Real-time Extract, Transform, Load

Real-time Extract, Transform, Load (rETL) is appropriate when service levels for data freshness demand more up-to-date information in the data warehousing environment. In this approach, the OLTP system must actively publish events of interest so that the rETL processes can extract them from a message bus (queue) on a timely basis. A message-based paradigm is used with publish and subscribe message bus structures or point-to-point messaging with reliable queues. In such cases, the staging area can be used as a real-time Operational Data Store, at least for the source concerned, and aggregation could run directly from the Operational Data Store (operational system) to the Access layer, or to the presentation layer in specific cases.

When designing source-ETL for Oracle Communications Data Model, use the architecture that best meets your business needs.

Before you begin building your extract systems, create a logical data interface document that maps the relationship between original source fields and target destination fields in the tables. This document ties the very beginning of the ETL system to the very end.

Columns in the data mapping document are sometimes combined. For example, the source database, table name, and column name could be combined into a single target column. The information within the concatenated column would be delimited with a period. Regardless of the format, the content of the logical data mapping document has been proven to be the critical element required to sufficiently plan ETL processes.

Data cleaning consists of all the steps required to clean and validate the data feeding a table and to apply known business rules to make the data consistent. The perspectives of the cleaning and conforming steps are less about the upside potential of the data and more about containment and control.

There are several potential data quality issues, related to each other, that the staging area needs to handle:

Data Validity: Is the data content and type sufficient to be usable, and as expected (and "profile" in case one uses this advanced option)?

Data Accuracy: correct addresses, correct with respect some "true" standard (or as such defined).

Data Completeness: is all the required data there? What to do when data is missing? What represents the minimum set of required data?

Data Consistency: that is, consistency of the data between the various sources and what rules one applies for inconsistencies.

Data Latency: A sub-part of data consistency, but treated separately because of its importance: when does data arrive, over which period and in which one can we combine, which one not?

Data Reasoning: This is more at reporting level but can be applied at the staging level: Does the data I see make sense from a business perspective? Can I really combine the data as an end-user would expect?

As a consequence, a multi-layer staging is generally required or expected.

If there are data quality problems, then build a plan, in agreement with IT and business users, for how to rectify these problems.

Answer the following questions:

Is data missing?

Is the data wrong or inconsistent?

Should the problem be fixed in the source systems?

Set up the data quality reporting and action program and people responsibility.

Set up the following processes and programs:

Set up a data quality measurement process.

Set up the data quality reporting and action program and people responsibility.

All data movement among ETL processes are composed of jobs. An ETL workflow executes these jobs in the proper sequence and with the necessary dependencies. General ETL tools, such as Oracle Warehouse Builder, support this kind of workflow, job design, and execution control.

Below are some tips when you design ETL jobs and workflow:

Use common structure across all jobs (source system to transformer to target data warehouse).

Have a one-to-one mapping from source to target.

Define one job per Source table.

Apply generic job structure and template jobs to allow for rapid development and consistency.

Use an optimized job design to leverage Oracle load performance based on data volumes.

Design parameterized job to allow for greater control over job performance and behavior.

Maximize Jobs parallelism execution.

Your ETL tool or your developed mapping scripts generate status and error handling tables.

As a general principle, all ETL logs status and errors into a table. You monitor execution status using an ETL tool or by querying this log table directly.

Whether you are developing mapping scripts and loading into a staging layer or directly into the foundation layer the goal is to get the data into the warehouse in the most expedient manner. In order to achieve good performance during the load you must begin by focusing on where the data to be loaded resides and how you load it into the database. For example, you should not use a serial database link or a single JDBC connection to move large volumes of data. The most common and preferred mechanism for loading large volumes of data is loading from flat files.

The following topics discuss best practices for ensuring your source-ETL loads efficiently:

The area where flat files are stored before being loaded into the staging layer of a data warehouse system is commonly known as staging area. The overall speed of your load is determined by:

How quickly the raw data can be read from staging area.

How quickly the raw data can be processed and inserted into the database.

Recommendations: Using a Staging Area

Stage the raw data across as many physical disks as possible to ensure that reading it is not a bottleneck during the load.

Also, if you are using the Exadata Database Machine, the best place to stage the data is in an Oracle Database File System (DBFS) stored on the Exadata storage cells. DBFS creates a mountable cluster file system which can you can use to access files stored in the database. Create the DBFS in a separate database on the Database Machine. This allows the DBFS to be managed and maintained separately from the data warehouse.

Mount the file system using the DIRECT_IO option to avoid thrashing the system page cache while moving the raw data files in and out of the file system.

See:

Oracle Database SecureFiles and Large Objects Developer's Guide for more information on setting up DBFS.In order to parallelize the data load Oracle Database must be able to logically break up the raw data files into chunks, known as granules. To ensure balanced parallel processing, the number of granules is typically much higher than the number of parallel server processes. At any given point in time, a parallel server process is allocated one granule to work on. After a parallel server process completes working on its granule, another granule is allocated until all of the granules are processed and the data is loaded.

Recommendations: Preparing Raw Data Files for Source-ETL

Follow these recommendations:

Deliminate each row using a known character such as a new line or a semicolon. This ensures that Oracle can look inside the raw data file and determine where each row of data begins and ends in order to create multiple granules within a single file.

If a file is not position-able and seek-able (for example the file is compressed or zip file), then the files cannot be broken up into granules and the whole file is treated as a single granule. In this case, only one parallel server process can work on the entire file. In order to parallelize the loading of compressed data files, use multiple compressed data files. The number of compressed data files used determines the maximum parallel degree used by the load.

When loading multiple data files (compressed or uncompressed):

Use a single external table, if at all possible

Make the files similar in size

Make the size of the files a multiple of 10 MB

If you must have files of different sizes, list the files from largest to smallest. By default, Oracle assumes that the flat file has the same character set as the database. If this is not the case, specify the character set of the flat file in the external table definition to ensure the proper character set conversions can take place.

Oracle offers several data loading options

External table or SQL*Loader

Oracle Data Pump (import and export)

Change Data Capture and Trickle feed mechanisms (such as Oracle GoldenGate)

Oracle Database Gateways to open systems and mainframes

Generic Connectivity (ODBC and JDBC)

The approach that you take depends on the source and format of the data you receive.

Recommendations: Loading Flat Files

If you are loading from files into Oracle you have two options: SQL*Loader or external tables.

Using external tables offers the following advantages:

Allows transparent parallelization inside the database.You can avoid staging data and apply transformations directly on the file data using arbitrary SQL or PL/SQL constructs when accessing external tables. SQL Loader requires you to load the data as-is into the database first.

Parallelizing loads with external tables enables a more efficient space management compared to SQL*Loader, where each individual parallel loader is an independent database sessions with its own transaction. For highly partitioned tables this could potentially lead to a lot of wasted space.

You can create an external table using the standard CREATE TABLE statement. However, to load from flat files the statement must include information about where the flat files reside outside the database. The most common approach when loading data from an external table is to issue a CREATE TABLE AS SELECT (CTAS) statement or an INSERT AS SELECT (IAS) statement into an existing table.

A direct path load parses the input data according to the description given in the external table definition, converts the data for each input field to its corresponding Oracle data type, then builds a column array structure for the data. These column array structures are used to format Oracle data blocks and build index keys. The newly formatted database blocks are then written directly to the database, bypassing the standard SQL processing engine and the database buffer cache.

The key to good load performance is to use direct path loads wherever possible:

A CREATE TABLE AS SELECT (CTAS) statement always uses direct path load.

A simple INSERT AS SELECT (IAS) statement does not use direct path load. In order to achieve direct path load with an IAS statement you must add the APPEND hint to the command.

Direct path loads can also run in parallel. To set the parallel degree for a direct path load, either:

Add the PARALLEL hint to the CTAS statement or an IAS statement.

Set the PARALLEL clause on both the external table and the table into which the data is loaded.

After the parallel degree is set:

A CTAS statement automatically performs a direct path load in parallel.

An IAS statement does not automatically perform a direct path load in parallel. In order to enable an IAS statement to perform direct path load in parallel, you must alter the session to enable parallel DML by executing the following statement.

alter session enable parallel DML;

A benefit of partitioning is the ability to load data quickly and easily with minimal impact on the business users by using the EXCHANGE PARTITION command. The EXCHANGE PARTITION command enables swapping the data in a nonpartitioned table into a particular partition in your partitioned table. The EXCHANGE PARTITION command does not physically move data, instead it updates the data dictionary to exchange a pointer from the partition to the table and vice versa.

Because there is no physical movement of data, an exchange does not generate redo and undo. In other words, an exchange is a sub-second operation and far less likely to impact performance than any traditional data-movement approaches such as INSERT.

Recommendations: Partitioning Tables

Partition the larger tables and fact tables in the Oracle Communications Data Model warehouse.

Example 4-1 Using Exchange Partition Statement with a Partitioned Table

Assume that there is a large table called Sales, which is range partitioned by day. At the end of each business day, data from the online sales system is loaded into the Sales table in the warehouse.

The following steps ensure the daily data gets loaded into the correct partition with minimal impact to the business users of the data warehouse and optimal speed:

Create external table for the flat file data coming from the online system

Using a CTAS statement, create a nonpartitioned table called tmp_sales that has the same column structure as Sales table

Build any indexes that are on the Sales table on the tmp_sales table

Issue the EXCHANGE PARTITION command.

Alter table Sales exchange partition p2 with

table top_sales including indexes without validation;

Gather optimizer statistics on the newly exchanged partition using incremental statistics.

The EXCHANGE PARTITION command in this example, swaps the definitions of the named partition and the tmp_sales table, so the data instantaneously exists in the right place in the partitioned table. Moreover, with the inclusion of the INCLUDING INDEXES and WITHOUT VALIDATION clauses, Oracle swaps index definitions and does not check whether the data actually belongs in the partition - so the exchange is very quick.

Note:

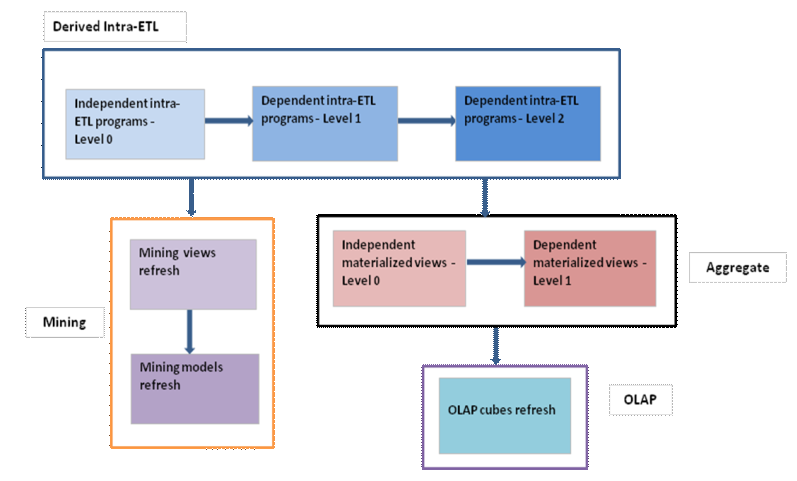

The assumption being made in this example is that the data integrity was verified at date extraction time. If you are unsure about the data integrity, omit theWITHOUT VALIDATION clause so that the Database checks the validity of the data.The Oracle Communications Data Model uses workflow implemented using PL/SQL packages to execute the intra-ETL process. The workflow consists of four major components:

Lookup Values

Executing Derived Intra-ETL Programs:

Independent Derived intra-ETL programs - Level 0

First level dependent Derived intra-ETL programs - Level 1

Second level dependent Derived intra-ETL programs - Level 2

Refreshing Aggregate Materialized Views:

Independent Aggregate materialized views - Level 0

First level dependent Aggregate materialized views - Level 1

Figure 4-2 illustrates the Oracle Communications Data Model intra-ETL workflow.

Figure 4-2 Oracle Communications Data Model Intra-ETL Workflow

Some Intra-ETLs expect some default values in order to work properly. They are usually associated with codes and stored as numbers but saved as text. For example, a typical status code (STAT_CD) is expected to start with 1 for pre-activated status, 2 with active status, 4 with deactivated status, and 5 with canceled status.

The advantage of defining numbers saved as text is that this allows the addition of custom codes that can be associated with an active state (for example 21, 2199, 21000001, and so on) that will be taken into account without having to change anything in the codes.

But of course, you will need to map the original codes to the text values. When you use an ETL lookup Matrix, as table, this allows the identifier of the source system, source table, the source column and the source code, and the Oracle Communications Data Model target table, column, and code.

You can define a simple function to search and leverage the correct code and map it to a number (stored as text to allow the use of SQL TEXT functions). You might want to add Oracle Communications Data Model Lookup tables with a SHORT_NAME column that would represent the original code of the source system, or the one that the end-users use, for reporting purposes only.

An alternative approach is to change the default value in the Intra-ETLs. Note that as soon as multiple sources map to the same Oracle Communications Data Model table, you will need some similar lookup code unification process.

The first workflow component is the Derived intra-ETL programs. This component has three subcomponents that handle the dependency among Derived intra-ETL programs:

Independent Derived intra-ETL programs, the first subcomponent, has Derived intra-ETL programs that get data from foundation layer tables, that is base, lookup, and reference tables.

Derived intra-ETL programs, the second subcomponent, handles parts that depend on the first subcomponent, Independent Derived intra-ETL programs. The second subcomponent intra-ETL programs get data from foundation layer tables, that is base, lookup, and reference tables and also from derived tables that have intra-ETL programs in first subcomponent.

The third subcomponent has Derived intra-ETL programs that depend on the both the first (Independent Derived intra-ETL programs) and the second subcomponents (First level dependent Derived intra-ETL programs). The third subcomponent intra-ETL programs get data from foundation layer tables, that is base, lookup, and reference tables and also from derived tables that have intra-ETL programs in both first and second subcomponents.

Intra-ETL programs in all three subcomponents are implemented using PL/SQL packages. All Intra-ETL packages except two (DWD_CNT_DAY_PKG and DWD_CUST_DNA_PKG) insert data for the ETL period mentioned in DWC_ETL_PARAMETER table for "OCDM-INTRA-ETL" process. The process name for DWD_CNT_DAY table is "DWD_CNT_DAY" and for DWD_CUST_DNA table it is "DWD_CUST_DNA". Modify the ETL period of all three processes according to your data load requirements. If you are trying to load data for ETL period, for which data is already loaded, intra-ETL program first truncates the partitions existing for the ETL period, and then loads data into the target derived table.

Modifying existing or adding new intra-ETLs is a common customization of Oracle Communications Data Model. If new data marts are required or if some existing data warehouse requires modifications, it is usual to either create an Intra-ETL from scratch or to copy an existing Intra-ETL and modify it. In both cases, the new or modified intra-ETL program needs to be added in the Package PKG_INTRA_ETL_PROCESS, and the old one needs to be switched off or commented, at the correct level of dependency.

Be sure to create or modify the target entity as required.

This is the second component of the workflow. This component depends on the first component, "Executing Derived Intra-ETL Programs". The execution of this component happens only when the execution of the first component completes successfully. This component has two subcomponents to deal with the dependency among the Aggregate materialized views:

Independent Aggregate materialized views, the first subcomponent, has aggregate materialized views that do not depend on any other aggregate materialized views and most of them get data from derived tables and reference tables. Whereas few materialized views get data from foundation layer tables and derived tables.

First level dependent Aggregate materialized views, the second component, has aggregate materialized views that depend on the first subcomponent, Independent Aggregate materialized views. The aggregate materialized views in this subcomponent get data from aggregate materialized views in first the subcomponent

Modifications or additions in this layer follow the same principle as the ones in the derived layer.

This is the third component of the workflow. This component depends on the first component, "Executing Derived Intra-ETL Programs". The execution of this component happens only when the execution of the first component completes successfully. This component refreshes data mining models based on the training day and apply day specified in ETL parameter table, DWC_ETL_PARAMETER table for BUILD-MINING-MODELS process.

The creation of new mining models or the adaptation of existing mining models for a specific business need could be seen as typical "customization" (or configuration in case of existing models). One should follow the standard mining process as described in the specific documentation for the Advanced Analytics option of the database (because data mining is a process as such, before being automated as part of the customized Intra-ETL processes).

Additional models, once finalized, should be seen as normal personalization of Oracle Communications Data Model to one's business.

As your model changes over time any customized models need to be reviewed and fine tuned to conform and provide useful information with the new data available, as part of the standard reprocessing of the mining models on a regular basis. It is usual to re-run and fine tune any given mining model at least every quarter, to make sure the current mining model takes into account any new trends from available data.

The mining intra-ETLs should be customized to correspondingly consider any model data additions or changes.

This is the fourth component of the workflow. This component depends on the second component, "Refreshing Aggregate Materialized Views", which in turn depends on the first component, "Executing Derived Intra-ETL Programs". The execution of this component happens only when the execution of the second component completes successfully. This component refreshes data in OLAP cubes and dimensions based on the parameters given in DWC_OLAP_ETL_PARAMETER table.

Similarly to data mining and aggregate customization, when you add a cube or change an existing cube is part of typical customization of Oracle Communications Data Model, your refresh must follows the same process as a cube creation or modification as described in the Oracle OLAP User's Guide. The Intra-ETL that fills the cubes should be correspondingly modified.

Oracle Communications Data Model intra-ETL workflow is implemented using a PL/SQL package, PKG_INTRA_ETL_PROCESS. Each component and their subcomponents of intra-ETL workflow have one procedure each. All these procedures are private to the package. The package has only one public procedure, which invokes all the private procedures as depicted in Figure 4-2. Before executing the workflow, ensure that you set all ETL parameters in DWC_OLAP_PARAMETER and DWC_OLAP_ETL_PARAMETER tables. Invoking PKG_INTRA_ETL_PROCESS.RUN procedure starts the workflow execution.

Performing an initial load of an Oracle Communications Data Model is a multistep process:

Load the foundation layer of the Oracle Communications Data Model warehouse (that is, the reference, lookup, and base tables) as described in "Performing an Initial Load of the Foundation Layer".

Load the access layer of the Oracle Communications Data Model warehouse (that is, the derived and aggregate tables, materialized views, OLAP cubes, and data mining models) as described in "Performing an Initial Load of the Access Layer".

The manner in which you perform an initial load of the foundation layer of an Oracle Communications Data Model warehouse (that is, the reference, lookup, and base tables) varies depending on whether you are using an application adapter for Oracle Communications Data Model:

If you are using an application adapter for Oracle Communications Data Model, then you use that adapter to load the foundation layer. For example, you can use the NCC Adapter for Oracle Communications Data Model to populate the foundation layer of an Oracle Communications Data Model warehouse with data from an Oracle Communications Network Charging and Control system.

If you are not using an application adapter, then you perform the initial load of the foundation layer using source-ETL that you create. See "Writing Your Own Source-ETL" for more information on creating this ETL.

To perform an initial load of access layer of the Oracle Communications Data Model warehouse (that is, the derived and aggregate tables, materialized views, OLAP cubes, and data mining models) take the following steps:

Update the parameters in DWC_ETL_PARAMETER control table in the ocdm_sys schema for different processes so that the ETL can use this information (that is, the beginning and end date of the ETL period) when loading the derived and aggregate tables and views.

For an initial load of an Oracle Communications Data Model warehouse, specify the values shown in the following tables:

For OCDM-INTRA-ETL process:

| Columns | Value |

|---|---|

PROCESS_NAME |

'OCDM-INTRA-ETL' |

FROM_DATE_ETL |

The beginning date of the ETL period. |

TO_DATE_ETL |

The ending date of the ETL period. |

For DWD_CUST_DNA process:

| Columns | Value |

|---|---|

PROCESS_NAME |

'DWD_CUST_DNA' |

FROM_DATE_ETL |

The beginning date of the ETL period. |

TO_DATE_ETL |

The ending date of the ETL period. |

For DWD_CNT_DAY process:

| Columns | Value |

|---|---|

PROCESS_NAME |

'DWD_CNT_DAY' |

FROM_DATE_ETL |

The beginning date of the ETL period. |

TO_DATE_ETL |

The ending date of the ETL period. |

For OCDM-DWA-MV-DATE process:

| Columns | Value |

|---|---|

PROCESS_NAME |

' OCDM-DWA-MV-DATE' |

FROM_DATE_ETL |

The beginning date of the ETL period. |

TO_DATE_ETL |

The ending date of the ETL period. |

For BUILD-MINING-MODELS process:

| Columns | Value |

|---|---|

PROCESS_NAME |

'BUILD-MINING-MODELS' |

FROM_DATE_ETL |

The beginning date of the ETL period. |

TO_DATE_ETL |

The ending date of the ETL period. |

For more information on DWC_ETL_PARAMETER control table, see Oracle Communications Data Model Reference.

Update the Oracle Communications Data Model OLAP ETL parameters in DWC_OLAP_ETL_PARAMETER control table in the ocdm_sys schema to specify the build method and other build characteristics so that the ETL can use this information when loading the OLAP cube data.

For an initial load of the analytic workspace, specify values following the guidelines in Table 4-1.

Table 4-1 Values of OLAP ETL Parameters in the DWC_OLAP_ETL_PARAMETER table for Initial Load

| Column Name | Value |

|---|---|

|

|

' |

|

|

C which specifies a complete refresh which clears all dimension values before loading. |

|

|

One of the following values that specifies the cubes you want to build:

|

|

|

A decimal value that specifies the number of parallel processes to allocate to this job. (Default value is 4.) The value that you specify varies depending on the setting of the JOB_QUEUE_PROCESSES database initialization parameter. |

|

|

One of the following values depending on whether you want to calculate forecast cubes:

|

|

|

If the value for the |

|

|

If the value for the |

|

|

If the value for the |

|

|

If the value for the |

|

|

Specify |

|

|

Specify |

Execute the intra-ETL as described in "Executing the Default Oracle Communications Data Model Intra-ETL".

The intra-ETL workflow is implemented using PL/SQL package, PKG_INTRA_ETL_PROCESS. This package has a public procedure, Run, and also has private procedures for executing derived intra-ETL programs, refreshing aggregate materialized views, refreshing data mining models, and refreshing OLAP cubes. The public procedure, Run, invokes all the private procedures.

Before executing intra-ETL workflow, update ETL parameters in DWC_ETL_PARAMETER and DWC_OLAP_ETL_PARAMETER tables. It is suggested to not use ocdm_sys user to update ETL parameter tables and executing intra-ETL workflow. Ask your DBA to create a user for performing these tasks using following commands:

CREATE USER ocdm_user IDENTIFIED BY ocdm_user; GRANT CREATE SESSION TO ocdm_user; GRANT ALTER SESSION TO ocdm_user; GRANT EXECUTE ON ocdm_sys.PKG_INTRA_ETL_PROCESS TO ocdm_user; GRANT EXECUTE ON ocdm_sys.PKG_INTRA_ETL_UTIL TO ocdm_user; GRANT SELECT,UPDATE ON ocdm_sys.DWC_ETL_PARAMETER TO ocdm_user; GRANT SELECT ON ocdm_sys.DWC_INTRA_ETL_ACTIVITY TO ocdm_user; GRANT SELECT ON ocdm_sys.DWC_INTRA_ETL_PROCESS TO ocdm_user; GRANT SELECT,UPDATE ON ocdm_sys.DWC_OLAP_ETL_PARAMETER TO ocdm_user; GRANT SELECT ON ocdm_sys.DWC_OLAP_ACTIVITY TO ocdm_user; GRANT SELECT ON ocdm_sys.DWC_MESSAGE TO ocdm_user;

Use ocdm_user user to update ETL parameter tables and execute intra-ETL workflow. In a SQLPLUS session, connect to ocdm_user user:

sqlplus ocdm_user/ocdm_user@SID

Update ETL parameter tables:

SQL> UPDATE DWC_ETL_PARAMETER

SET from_date_etl = < The beginning date of the ETL period >,

to_date_etl = < The ending date of the ETL period >

WHERE process_name = 'OCDM-INTRA-ETL'

;

/

SQL> commit;

SQL> UPDATE DWC_ETL_PARAMETER

SET from_date_etl = < The beginning date of the ETL period >,

to_date_etl = < The ending date of the ETL period >

WHERE process_name = 'DWD_CUST_DNA'

;

/

SQL> commit;

SQL> UPDATE DWC_ETL_PARAMETER

SET from_date_etl = < The beginning date of the ETL period >,

to_date_etl = < The ending date of the ETL period >

WHERE process_name = 'DWD_CNT_DAY'

;

/

SQL> commit;

SQL> UPDATE DWC_ETL_PARAMETER

SET from_date_etl = < The beginning date of the ETL period >,

to_date_etl = < The ending date of the ETL period >

WHERE process_name = 'OCDM-DWA-MV-DATE'

;

/

SQL> commit;

SQL> UPDATE DWC_ETL_PARAMETER

SET from_date_etl = < The beginning date of the ETL period >,

to_date_etl = < The ending date of the ETL period >

WHERE process_name = 'BUILD-MINING-MODELS'

;

/

SQL> commit;

SQL> UPDATE DWC_OLAP_ETL_PARAMETER

SET build_method = <>,

cubename = <>,

.

.

.

.

fcst_st_yr = <>,

fcst_end_yr = <>

;

/

SQL> commit;

Run the following command to execute intra-ETL workflow:

SQL> BEGIN OCDM_SYS.PKG_INTRA_ETL_PROCESS.Run; END; /

The status of each activity is tracked using DWC_INTRA_ETL_ACTIVITY table. The status of each cube data loading is tracked using DWC_OLAP_ACTIVITY table. The status of the entire intra-ETL workflow process is tracked using DWC_INTRA_ETL_PROCESS table. See "Monitoring the Execution of the Intra-ETL Process" for more information on these tables.

The section, "Performing an Initial Load of the Access Layer" describes how to perform an initial load of an Oracle Communications Data Model data warehouse. After this initial load, you must load new data into your Oracle Communications Data Model data warehouse regularly so that it can serve its purpose of facilitating business analysis.

To load new data into your Oracle Communications Data Model warehouse, you extract the data from one or more operational systems and copy that data into the warehouse. The challenge in data warehouse environments is to integrate, rearrange and consolidate large volumes of data over many systems, thereby providing a new unified information base for business intelligence.

The successive loads and transformations must be scheduled and processed in a specific order that is determined by your business needs. Depending on the success or failure of the operation or parts of it, the result must be tracked and subsequent, alternative processes might be started.

You can do a full incremental load of the Oracle Communications Data Model warehouse, or you can refresh the data sequentially, as follows:

Refreshing the Foundation Layer of Oracle Communications Data Model Warehouse

Refreshing the Access Layer of an Oracle Communications Data Model Warehouse

In either case, you can manage errors during the execution of the intra-ETL as described in .

You can refresh the foundation layer of an Oracle Communications Data Model warehouse (that is, the reference, lookup, and base tables) in the following ways:

If an application adapter for Oracle Communications Data Model is available for the system from which you want to refresh the foundation layer of an Oracle Communications Data Model warehouse, you can use that adapter to refresh the foundation layer.

You can refresh the foundation layer using source-ETL scripts that you wrote using Oracle Warehouse Builder or another ETL tool. For more information on creating source-ETL, see "Writing Your Own Source-ETL".

Refreshing the access layer of an Oracle Communications Data Model is a multi-step process. You can do a full incremental load of the access layer all at one time, or you can refresh the data sequentially, as follows:

Refreshing Oracle Communications Data Model Derived Tables

Refreshing Oracle Communications Data Model Aggregate Materialized Views

Refreshing Oracle Communications Data Model OLAP Cubes

Refreshing Oracle Communications Data Model Data Mining Models

In either case, you can manage errors during the execution of the intra-ETL as described in "Managing Errors During Oracle Communications Data Model Intra-ETL Execution".

To accomplish incremental loading of Oracle Communications Data Model data warehouse, ask your DBA to grant execute privilege on Derived intra-ETL, OLAP ETL, and Mining PL/SQL packages:

GRANT EXECUTE ON ocdm_sys.DWD_ACCT_BAL_MO_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_ACCT_DEBT_MO_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_ACCT_FRST_ACTVTY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_ACCT_LAST_ACTVTY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_ACCT_PYMT_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_ACCT_PMT_MTD_STAT_HST_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_AGRMNT_CHNG_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_AGRMNT_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CANBLZTN_DTL_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CMPGN_HIST_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CNT_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CNTCT_CNTR_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CUST_EQPMNT_INSLTN_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CUST_ORDR_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CUST_ORDR_LN_ITEM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CUST_RFMP_SCR_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CUST_SKU_SL_RETRN_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_DATA_USG_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_GIVE_AWAY_ITEM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_INV_ADJ_ITEM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_INV_POSN_ITEM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_INV_RCPT_ITEM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_INV_UNAVL_ITEM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_INV_XFER_ITEM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_INVC_AGNG_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_INVC_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_LYLTY_MBR_PNT_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_NBR_PRT_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_POS_TNDR_FLOW_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_PRCS_INVC_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_RTL_SL_RETRN_ITEM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_SPLMNTR_SRVC_USG_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_SRVC_PRBLM_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_STORE_EFFNCY_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_VAS_SBRP_QCK_SUMM_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_VAS_USG_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_VOI_CALL_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_AGRMNT_RVN_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_PRPD_ACCT_STTSTC_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_RVN_DAY_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.DWD_CUST_DNA_PKG TO ocdm_user; GRANT EXECUTE ON ocdm_sys.PKG_MINING_ETL TO ocdm_user; GRANT EXECUTE ON ocdm_sys.PKG_OCDM_MINING TO ocdm_user; GRANT EXECUTE ON ocdm_sys.PKG_OCDM_OLAP_ETL_AW_LOAD TO ocdm_user;

Refreshing the relational tables in an Oracle Communications Data Model is a multi-step process:

Refresh the foundation layer of the Oracle Communications Data Model warehouse (that is, the reference, lookup, and base tables) with operational system data by executing the source-ETL that you have written.

Update the parameters of the DWC_ETL_PARAMETER control table for three processes('OCDM-INTRA-ETL', 'DWD_CUST_DNA', 'DWD_CNT_DAY'). Please refer to "Performing an Initial Load of an Oracle Communications Data Model Warehouse" for more information on the DWC_ETL_PARAMETER table. For an incremental load of an Oracle Communications Data Model warehouse, specify the values shown in the following table (that is, the beginning and end date of the ETL period) for all three processes

| Columns | Value |

|---|---|

| FROM_DATE_ETL | The beginning date of the ETL period. |

| TO_DATE_ETL | The ending date of the ETL period. |

For more information on DWC_ETL_PARAMETER control table, see Oracle Communications Data Model Reference.

Create a session by connecting ocdm_user user through SQLPLUS. Then, start an intra-ETL process. Make sure the previous process ended with 'COMPLETED-SUCCESS' status before starting a new process:

sqlplus ocdm_user/ocdm_user@SID SQL> DECLARE l_process_type OCDM_SYS.DWC_INTRA_ETL_PROCESS.PROCESS_TYPE%TYPE; l_error_text OCDM_SYS.DWC_MESSAGE.MESSAGE_TEXT%TYPE; l_process_no NUMBER; BEGIN l_process_no := OCDM_SYS.PKG_INTRA_ETL_UTIL.Start_Process(l_process_type,l_error_text); END; /

Refresh Oracle Communications Data Model derived tables by executing following commands:

SQL> DECLARE

p_process_no NUMBER;

l_status VARCHAR2(20);

BEGIN

l_status := OCDM_SYS.DWD_ACCT_BAL_MO_PKG.Load('DWD_ACCT_BAL_MO',p_process_no);

l_status := OCDM_SYS.DWD_ACCT_DEBT_MO_PKG.Load('DWD_ACCT_DEBT_MO',p_process_no);

l_status := OCDM_SYS.DWD_ACCT_FRST_ACTVTY_PKG.Load('DWD_ACCT_FRST_ACTVTY',p_process_no);

l_status := OCDM_SYS.DWD_ACCT_LAST_ACTVTY_PKG.Load('DWD_ACCT_LAST_ACTVTY',p_process_no);

l_status := OCDM_SYS.DWD_ACCT_PYMT_DAY_PKG.Load('DWD_ACCT_PYMT_DAY',p_process_no);

l_status := OCDM_SYS.DWD_ACCT_PMT_MTD_STAT_HST_PKG.Load('DWD_ACCT_PYMT_MTHD_STAT_HIST',p_process_no);

l_status := OCDM_SYS.DWD_AGRMNT_CHNG_PKG.Load('DWD_AGRMNT_CHNG',p_process_no);

l_status := OCDM_SYS.DWD_AGRMNT_PKG.Load('DWD_AGRMNT',p_process_no);

l_status := OCDM_SYS.DWD_CANBLZTN_DTL_DAY_PKG.Load('DWD_CANBLZTN_DTL_DAY',p_process_no);

l_status := OCDM_SYS.DWD_CMPGN_HIST_DAY_PKG.Load('DWD_CMPGN_HIST_DAY',p_process_no);

l_status := OCDM_SYS.DWD_CNT_DAY_PKG.Load('DWD_CNT_DAY',p_process_no);

l_status := OCDM_SYS.DWD_CNTCT_CNTR_DAY_PKG.Load('DWD_CNTCT_CNTR_DAY',p_process_no);

l_status := OCDM_SYS.DWD_CUST_EQPMNT_INSLTN_DAY_PKG.Load('DWD_CUST_EQPMNT_INSTLTN_DAY',p_process_no);

l_status := OCDM_SYS.DWD_CUST_ORDR_DAY_PKG.Load('DWD_CUST_ORDR_DAY',p_process_no);

l_status := OCDM_SYS.DWD_CUST_ORDR_LN_ITEM_DAY_PKG.Load('DWD_CUST_ORDR_LN_ITEM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_CUST_RFMP_SCR_PKG.Load('DWD_CUST_RFMP_SCR',p_process_no);

l_status := OCDM_SYS.DWD_CUST_SKU_SL_RETRN_DAY_PKG.Load('DWD_CUST_SKU_SL_RETRN_DAY',p_process_no);

l_status := OCDM_SYS.DWD_DATA_USG_DAY_PKG.Load('DWD_DATA_USG_DAY',p_process_no);

l_status := OCDM_SYS.DWD_GIVE_AWAY_ITEM_DAY_PKG.Load('DWD_GIVE_AWAY_ITEM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_INV_ADJ_ITEM_DAY_PKG.Load('DWD_INV_ADJ_ITEM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_INV_POSN_ITEM_DAY_PKG.Load('DWD_INV_POSN_ITEM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_INV_RCPT_ITEM_DAY_PKG.Load('DWD_INV_RCPT_ITEM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_INV_UNAVL_ITEM_DAY_PKG.Load('DWD_INV_UNAVL_ITEM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_INV_XFER_ITEM_DAY_PKG.Load('DWD_INV_XFER_ITEM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_INVC_AGNG_DAY_PKG.Load('DWD_INVC_AGNG_DAY',p_process_no);

l_status := OCDM_SYS.DWD_INVC_DAY_PKG.Load('DWD_INVC_DAY',p_process_no);

l_status := OCDM_SYS.DWD_LYLTY_MBR_PNT_DAY_PKG.Load('DWD_LYLTY_MBR_PNT_DAY',p_process_no);

l_status := OCDM_SYS.DWD_NBR_PRT_DAY_PKG.Load('DWD_NBR_PRT_DAY',p_process_no);

l_status := OCDM_SYS.DWD_POS_TNDR_FLOW_PKG.Load('DWD_POS_TNDR_FLOW',p_process_no);

l_status := OCDM_SYS.DWD_PRCS_INVC_DAY_PKG.Load('DWD_PRCS_INVC_DAY',p_process_no);

l_status := OCDM_SYS.DWD_RTL_SL_RETRN_ITEM_DAY_PKG.Load('DWD_RTL_SL_RETRN_ITEM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_SPLMNTR_SRVC_USG_PKG.Load('DWD_SPLMNTR_SRVC_USG',p_process_no);

l_status := OCDM_SYS.DWD_SRVC_PRBLM_DAY_PKG.Load('DWD_SRVC_PRBLM_DAY',p_process_no);

l_status := OCDM_SYS.DWD_STORE_EFFNCY_DAY_PKG.Load('DWD_STORE_EFFNCY_DAY',p_process_no);

l_status := OCDM_SYS.DWD_VAS_SBRP_QCK_SUMM_PKG.Load('DWD_VAS_SBRP_QCK_SUMM',p_process_no);

l_status := OCDM_SYS.DWD_VAS_USG_DAY_PKG.Load('DWD_VAS_USG_DAY',p_process_no);

l_status := OCDM_SYS.DWD_VOI_CALL_DAY_PKG.Load('DWD_VOI_CALL_DAY',p_process_no);

END;

/

SQL> DECLARE

p_process_no NUMBER;

l_status VARCHAR2(20);

BEGIN

l_status := OCDM_SYS.DWD_AGRMNT_RVN_DAY_PKG.Load('DWD_AGRMNT_RVN_DAY',p_process_no);

l_status := OCDM_SYS.DWD_PRPD_ACCT_STTSTC_DAY_PKG.Load('DWD_PRPD_ACCT_STTSTC_DAY',p_process_no);

l_status := OCDM_SYS.DWD_RVN_DAY_PKG.Load('DWD_RVN_DAY',p_process_no);

END;

/

SQL> DECLARE

p_process_no NUMBER;

l_status VARCHAR2(20);

BEGIN

l_status := OCDM_SYS.DWD_CUST_DNA_PKG.Load('DWD_CUST_DNA',p_process_no);

END;

/

Refreshing the Aggregate Materialized Views in an Oracle Communications Data Model is a multi-step process:

Refresh the foundation layer of the Oracle Communications Data Model warehouse (that is, the reference, lookup, and base tables) with operational system data by executing the source-ETL that you have written.

Refresh Oracle Communications Data Model derived tables as explained in Refreshing Oracle Communications Data Model Derived Tables.

Update the parameters of the DWC_ETL_PARAMETER control table for OCDM-DWA-MV-DATE process. Please refer to Performing an Initial Load of an Oracle Communications Data Model Warehouse section to know how to update DWC_ETL_PARAMETER table. For an incremental load of an Oracle Communications Data Model warehouse, specify the values shown in the following table (that is, the beginning and end date of the ETL period) for OCDM-DWA-MV-DATE process.

| Columns | Value |

|---|---|

FROM_DATE_ETL |

The beginning date of the ETL period. |

TO_DATE_ETL |

The ending date of the ETL period. |

For more information on DWC_ETL_PARAMETER control table, see Oracle Communications Data Model Reference.

Create a session by connecting ocdm_user user through SQLPLUS. An intra-ETL process created in Refreshing Oracle Communications Data Model Derived Tables must be in 'RUNNING' status now:

sqlplus ocdm_user/ocdm_user@SID

Refresh Oracle Communications Data Model aggregate materialized views by executing following commands:

SQL> DECLARE

p_process_no NUMBER;

l_status VARCHAR2(20);

BEGIN

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_ACCT_DEBT_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_ACCT_PYMT_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_ACCT_STTSTC_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_AGRMNT_ACCT_SBRP_PROD',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_ARPU_BASE_CUST_TYP',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_BER_FER_ERR_RATIO_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CALL_CNTR_CALL_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CALL_CNTR_CASE_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CELL_STTSTC_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CMISN_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CNT_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_COST_CNTR_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CUST_ACQSTN_SUMM_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CUST_CHRN_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CUST_COST_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CUST_DEBT_COLLCTN_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CUST_EQPMNT_INSTLTN_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CUST_ORDR_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_DATA_USG_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_INVC_ADJ_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_INVC_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_INV_POSN_DEPT_DAY',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_INV_POSN_SBC_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_IN_PLTFRM_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_LYLTY_PROG_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_MKT_SHARE',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_MSC_TRFC_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_NBR_PRT_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_NTWK_AVLBLTY_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_NTWK_TCHPNT_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_PRPD_ALWNCE_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_PRTNR_STLMNT_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_RDMPTN_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_RF_NTWK_CPCTY_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_RVN_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_SBSCBR_STTSTC_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_SL_CMPGN_SUMM_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_SPLMNTR_SRVC_USG_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_STORE_EFFNCY_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_VAS_SBRP_QCK_SUMM_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_VAS_USG_MO',p_process_no);

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_VOI_CALL_MO',p_process_no);

END;

/

SQL> DECLARE

p_process_no NUMBER;

l_status VARCHAR2(20);

BEGIN

l_status := OCDM_SYS.PKG_INTRA_ETL_UTIL.Refresh_MV('DWA_CUST_GROSS_ORDRS_QTR',p_process_no);

END;

/

On a scheduled basis you must update the OLAP cube data with the relational data that has been added to the Oracle Communications Data Model data warehouse since the initial load of the OLAP cubes. Refreshing the OLAP Cubes in an Oracle Communications Data Model is a multi-step process:

Refresh the foundation layer of the Oracle Communications Data Model warehouse (that is, the reference, lookup, and base tables) with operational system data by executing the source-ETL that you have written.

Refresh Oracle Communications Data Model derived tables as explained in Refreshing Oracle Communications Data Model Derived Tables.

Refresh Oracle Communications Data Model aggregate materialized views as explained in Refreshing Oracle Communications Data Model Aggregate Materialized Views.

Update the parameters of the DWC_OLAP_ETL_PARAMETER control table. Please refer to Performing an Initial Load of an Oracle Communications Data Model Warehouse section to know how to update DWC_OLAP_ETL_PARAMETER table.

For more information on DWC_OLAP_ETL_PARAMETER control table, see Oracle Communications Data Model Reference.

Create a session by connecting ocdm_user user through SQLPLUS. An intra-ETL process created in Refreshing Oracle Communications Data Model Derived Tables must be in 'RUNNING' status now:

sqlplus ocdm_user/ocdm_user@SID

Refresh Oracle Communications Data Model OLAP cubes by executing following commands:

SQL> DECLARE

l_build_methd OCDM_SYS.DWC_OLAP_ETL_PARAMETER.BUILD_METHOD%TYPE;

l_cube_nm OCDM_SYS.DWC_OLAP_ETL_PARAMETER.CUBENAME%TYPE;

l_maxjobques OCDM_SYS.DWC_OLAP_ETL_PARAMETER.MAXJOBQUEUES%TYPE;

l_calc_fcst OCDM_SYS.DWC_OLAP_ETL_PARAMETER.CALC_FCST%TYPE;

l_no_fcst_yrs OCDM_SYS.DWC_OLAP_ETL_PARAMETER.NO_FCST_YRS%TYPE;

l_fcst_mthd OCDM_SYS.DWC_OLAP_ETL_PARAMETER.FCST_MTHD%TYPE;

l_fcst_st_yr OCDM_SYS.DWC_OLAP_ETL_PARAMETER.FCST_ST_YR%TYPE;

l_fcst_end_yr OCDM_SYS.DWC_OLAP_ETL_PARAMETER.FCST_END_YR%TYPE;

l_status VARCHAR2(20);

BEGIN

/*************** Fetching the values of the OLAP ETL parameters variable used in this procedure ****************/

SELECT

BUILD_METHOD l_build_methd,

CUBENAME l_cube_nm,

MAXJOBQUEUES l_maxjobques,

CALC_FCST l_calc_fcst,

NO_FCST_YRS l_no_fcst_yrs,

FCST_MTHD l_fcst_mthd,

FCST_ST_YR l_fcst_st_yr,

FCST_END_YR l_fcst_end_yr

INTO

l_build_methd,

l_cube_nm,

l_maxjobques,

l_calc_fcst,

l_no_fcst_yrs,

l_fcst_mthd,

l_fcst_st_yr,

l_fcst_end_yr

FROM

OCDM_SYS.DWC_OLAP_ETL_PARAMETER;

l_status := OCDM_SYS.PKG_OCDM_OLAP_ETL_AW_LOAD.olap_etl_aw_build(l_build_methd,l_cube_nm,l_maxjobques,l_calc_fcst,l_no_fcst_yrs,l_fcst_mthd,l_fcst_st_yr,l_fcst_end_yr,null,null);

END;

/

If there is requirement to refresh only Oracle Communications Data Model OLAP cubes, the same can be achieved with step 6, but before that make sure an intra-ETL process is already running. If no intra-ETL process is running, start one:

sqlplus ocdm_user/ocdm_user@SID SQL> DECLARE l_process_type OCDM_SYS.DWC_INTRA_ETL_PROCESS.PROCESS_TYPE%TYPE; l_error_text OCDM_SYS.DWC_MESSAGE.MESSAGE_TEXT%TYPE; l_process_no NUMBER; BEGIN l_process_no := OCDM_SYS.PKG_INTRA_ETL_UTIL.Start_Process(l_process_type,l_error_text); END; /

Refreshing of data mining models is integrated into intra-ETL workflow. Data mining models get refreshed whenever intra-ETL workflow is executed. Data mining models trained using training data collected based on from_date_etl parameter and scored on apply data collected based on from_date_etl parameter in DWC_ETL_PARAMETER table for BUILD-MINING-MODELS process. You can also refresh all data mining models together or refresh each data mining model individually.

Refreshing the Data Mining Models in an Oracle Communications Data Model is a multi-step process:

Refresh the foundation layer of the Oracle Communications Data Model warehouse (that is, the reference, lookup, and base tables) with operational system data by executing the source-ETL that you have written.

Refresh Oracle Communications Data Model derived tables as explained in "Refreshing Oracle Communications Data Model Derived Tables".

Update the parameters of the DWC_ETL_PARAMETER control table for BUILD-MINING-MODELS process. See "Performing an Initial Load of an Oracle Communications Data Model Warehouse" for information on updating the DWC_ETL_PARAMETER table.

For more information on DWC_ETL_PARAMETER control table, see Oracle Communications Data Model Reference.

Create a session by connecting ocdm_user user through SQLPLUS. An intra-ETL process created in "Refreshing Oracle Communications Data Model Derived Tables" must be in 'RUNNING' status now:

sqlplus ocdm_user/ocdm_user@SID

Refresh Oracle Communications Data Model data mining models by executing following commands:

SQL> DECLARE

l_trnng_day DATE;

l_apply_day DATE;

l_trnng_day_key NUMBER(30);

l_apply_day_key NUMBER(30);

l_status VARCHAR2(500);

BEGIN

SELECT from_date_etl, to_date_etl INTO l_trnng_day, l_apply_day

FROM ocdm_sys.dwc_etl_parameter

WHERE process_name = 'BUILD-MINING-MODELS';

l_trnng_day_key := TO_CHAR(l_trnng_day,'YYYYMMDD');

l_apply_day_key := TO_CHAR(l_apply_day,'YYYYMMDD');

-- Create/refresh mining source views OCDM_SYS.PKG_MINING_ETL.refresh_mining_views(l_trnng_day_key,l_apply_day_key);

-- Build mining models

l_status := OCDM_SYS.PKG_OCDM_MINING.REFRESH_MODEL(l_apply_day_key,NULL);

END;

/

You can also refresh data mining models individually. To refresh Prepaid SVM Churn model, execute the following command (make sure you are connected as ocdm_user user):

SQL> exec ocdm_sys.pkg_ocdm_mining.create_prpd_churn_svm_model(training_day_key);

This topic discusses how you can identify and manage errors during intra-ETL execution. It contains the following topics:

Three ocdm_sys schema control tables, DWC_INTRA_ETL_PROCESS, DWC_INTRA_ETL_ACTIVITY, DWC_OLAP_ACTIVITY monitor the execution of the intra-ETL process. These tables are documented in Oracle Communications Data Model Reference. You can access these three tables from ocdm_user user.

Each normal run (as opposed to an error-recovery run) of a separate intra-ETL execution performs the following steps:

Inserts a record into the DWC_INTRA_ETL_PROCESS table with a monotonically increasing system generated unique process key, SYSDATE as process start time, RUNNING as the process status, and an input date range in the FROM_DATE_ETL and TO_DATE_ETL columns.

Invokes each of the individual intra-ETL programs in the appropriate order of dependency. Before the invocation of each program, the procedure inserts a record into the intra-ETL Activity detail table, DWC_INTRA_ETL_ACTIVITY, with values for:

ACTIVITY_KEY, a system generated unique activity key.

PROCESS_KEY, the process key value corresponding to the intra-ETL process.

ACTIVITY_NAME, an individual program name.

ACTIVITY_DESC, a suitable activity description.

ACTIVITY_START_TIME, the value of SYSDATE.

ACTIVITY_STATUS, the value of RUNNING.

Updates the corresponding record in the DWC_INTRA_ETL_ACTIVITY table for the activity end time and activity status after the completion of each individual ETL program (either successfully or with errors). For successful completion of the activity, the procedure updates the status as 'COMPLETED-SUCCESS'. When an error occurs, the procedure updates the activity status as 'COMPLETED-ERROR', and also updates the corresponding error detail in the ERROR_DTL column.

Updates the record corresponding to the process in the DWC_INTRA_ETL_ PROCESS table for the process end time and status, after the completion of all individual intra-ETL programs. When all the individual programs succeed, the procedure updates the status to 'COMPLETED-SUCCESS'; otherwise it updates the status to 'COMPLETED-ERROR'.

For OLAP cubes loading, a record is inserted into DWC_OLAP_ACTIVITY table with CUBENAME as cube name, status as 'RUNNING', and LOAD_START_DT as SYSDATE for each cube. It updates the record upon the completion of cube loading. It updates STATUS column to 'COMPLETED-SUCCESS' if cube loading is successful, otherwise 'COMPLETE-ERROR' and updates LOAD_END_DT column to SYSDATE. In case of 'COMPLETED-ERROR' cubes, it also updates ERROR_DTL column with error details.

You can monitor the execution state of the intra-ETL, including current process progress, time taken by individual programs, or the complete process, by viewing the contents of the DWC_INTRA_ETL_PROCESS, DWC_INTRA_ETL_ACTIVITY, and DWC_OLAP_ACTIVITY tables. In DWC_INTRA_ETL_ACTIVITY table, see the records of currently running process. Monitoring can be done both during and after the execution of the intra-ETL procedure.

To recover an intra-ETL process

Identify the errors by looking at the corresponding error details that are tracked against the individual programs in the DWC_INTRA_ETL_ACTIVITY table.

Identify errors of OLAP cubes loading for individual cubes in DWC_OLAP_ACTIVITY table.

Correct the causes of the errors.

Re-invoke the intra-ETL process.

The intra-ETL workflow process identifies whether it is a normal run or recovery run by referring the DWC_INTRA_ETL_ACTIVITY table. During a recovery run, the intra-ETL workflow executes only the necessary programs. For example, for a derived population error as a part of the previous run, this recovery run executes the individual derived population programs which produced errors in the previous run. After their successful completion, the run refreshes aggregate materialized views in the appropriate order.

In this way, the intra-ETL error recovery is almost transparent, without involving the data warehouse or ETL administrator. The administrator must only correct the causes of the errors and re-invoke the intra-ETL process. The intra-ETL process identifies and executes the programs that generated errors.