| Oracle® Communications Data Model Implementation and Operations Guide Release 11.3.2 E28442-05 |

|

|

PDF · Mobi · ePub |

| Oracle® Communications Data Model Implementation and Operations Guide Release 11.3.2 E28442-05 |

|

|

PDF · Mobi · ePub |

This chapter provides information about customizing the access layer of Oracle Communications Data Model. It includes the following topics:

Introduction to Customizing the Access Layer of Oracle Communications Data Model

Dimensional Components in the Oracle Communications Data Model

The access layer of Oracle Communications Data Model provides the calculated and summarized ("flattened") perspectives of the data needed by business intelligence tools. Access layer objects are populated using the data from the foundation layer 3NF objects.

The access layer objects in the ocdm_sys schema include: derived and aggregate tables and OLAP cube views. This layer also contains data mining models. The results of these models are stored in derived tables. The models themselves are also defined in the ocdm_sys schema.

When designing and customizing access layer objects:

Follow the general guidelines for customizing physical objects given in "General Recommendations When Designing Physical Structures".

Design access layer objects to support business intelligence reports and queries that your business end-users require. See Chapter 5, "Report and Query Customization."

The following topics provide specialized information about designing and customizing access layer objects:

Derived tables have a DWD_ prefix. Derived tables are tables where one of the following apply:

Have as values the result of a non-aggregate calculation against the data in the foundation layer tables.

Have some minimal level of aggregation, typically at the day level (for example, DWD_CNT_DAY).

Summarize, at day or month level, all activities over the period of specific processes, split in various attributes (for example, DWD_ACCT_BAL_MO).

Some derived tables leverage, and hence are dependent on, other derived tables (for example DWD_CUST_MNNG).

Depending on the type of derived table you customize derived tables as follows:

Tables that hold the results of a calculation such as the DWD_BER_FER_ERR_RATIO_DAY table that contains values that are the daily BER (Bit Error Rate) and FER (Frame Error Rate) statistics about the network elements. For information on customizing these tables, see "Creating New Derived Tables for Calculated Data".

Result tables for the data mining models (for example, DWD_CUST_MNNG). For information on customizing data mining models, see "Customizing Oracle Communications Data Model Data Mining Models".

See:

The Derived Tables topic in Oracle Communications Data Model Reference for a list of all of the derived tables in the default Oracle Communications Data Model. For a list of only those derived tables that are results tables for the data mining models, see the chapter on Data Mining Models in Oracle Communications Data Model Reference.If, during fit-gap analysis, you identified a need for calculated data that is not provided by the default derived tables, you can meet this need by defining new tables or, alternatively, by adding missing dimensions and measures to existing derived tables. When designing these tables, name the tables following the convention of using the CWD_ prefix (for Customized Warehouse Derived) or DWD_ (for Data Warehouse Derived). Make sure all the main dimensions are put first and have Foreign Keys to their corresponding reference or lookup tables. Attributes that add information only, avoiding costly joins, should not be part of the Primary Key of the derived table. Some dimensions that are part of a hierarchy do not necessarily need to be part of the Primary Key. All measures should be put afterward, grouped if possible by similar meaning. Make sure that all monetary measures should have the LOCAL and REPORTING amount associated, and if possible the currency (as required in the TM Forum SID).

See:

Oracle Communications Data Model Reference for details on dimensions that are part of a hierarchy.Some derived (DWD_) tables in the default ocdm_sys schema are the results of data mining models defined in the default Oracle Communications Data Model. Those models are defined in the default ocdm_sys schema that also comes with Oracle Communications Data Model.

Oracle Communications Data Model data mining models get source data from views defined on two derived tables (DWD_) and a base table (DWB_). These tables are:

DWD_CUST_DNA

DWD_VAS_SBRP_QCK_SUMM

DWB_EVT_PRTY_INTRACN

Derived tables store data mining models prediction results and model rules, in (DWD_), and use reference tables (DWR_), and look up tables (DWL_). These tables are:

DWD_CUST_PROD_AFFLTN

DWD_CUST_DNA

DWR_CUST_DT_NODE

DWD_CHRN_SVM_FACTOR

DWD_CHRN_SVM_ROC

DWD_PROMO_SVM_FACTOR

DWD_PROMO_SVM_ROC

DWR_CUST_SGMNT

DWR_CUST_SGMNT_DTL

DWL_MNNG_LTV_BAND

DWL_MNNG_LT_SRVVL_BAND

When Oracle Communications Data Model is installed the installer copies the mining source scripts to $ORACLE_HOME/ocdm/pdm/mining/src.

Table 3-1 lists the mining scripts.

Table 3-1 Oracle Communications Data Model Mining Scripts

| Script Name | Description |

|---|---|

|

|

Predefined dictionary for customer comment scoring. |

|

|

Initializes mining environment and executes the other three mining scripts. |

|

|

Defines views, which have training/apply data, on source tables. |

|

|

Core mining package that has a procedure for each model. Each procedure drops, creates mining model and scores mining model. |

When you create a customized Oracle Communications Data Model warehouse, you can customize the data mining models in the following ways:

Create a model as discussed in "Creating a New Data Mining Model for Oracle Communications Data Model".

Modify an existing model as discussed in "Modifying Oracle Communications Data Model Data Mining Models".

To create a data mining model:

Define the problem and identify input attributes. Also identify target attribute if the mining problem is supervised.

Check if the existing mining source views defined in pkg_mining_etl.sql script support the requirement of your problem. Modify the definition of views to support your requirement. Do not remove any columns from view definition unless you are sure that those columns do not make any sense.

If the existing mining source views do not support required fields, create a new table or view to support your requirements. Add the new table to pkg_mining_etl.sql PL/SQL package. Follow the naming conventions outlined in "Conventions When Customizing the Physical Model" and use a DWD_ prefix for results tables. Modify the intra-ETL programs to support your mining problem requirements.

For each mining problem that Oracle Data Mining supports, there is more than one algorithm. Create a setting table for your mining problem and follow the naming convention. The prefix for a setting table is "DM_". Add the definition of new setting table to ocdm_mining_init.sql script.

Add a procedure for your mining problem to pkg_ocdm_mining PL/SQL package. This procedure should create mining model and score the trained mining model on apply data. Compile the package. To create the mining model for your problem, invoke the newly added procedure. Make sure your new procedure works according to your expectations. Check user_mining_models data dictionary view for trained model. There are few more data dictionary views that give more information on the trained models. For more details, refer to Oracle Data Mining Concepts.

To customize Oracle Communications Data Model mining models, take the following steps:

Change the definition of source views used as input to the mining model.

If required, change the definition of source derived table, DWD_CUST_DNA. Do not remove any existing columns. Only add new columns with NULL enable.

Modify the intra-ETL package of DWD_CUST_DNA table. Execute the intra-ETL package to load data into DWD_CUST_DNA table.

Refresh mining views by executing following statement. You need to pass training day and apply day key:

SQL> exec PKG_MINING_ETL.refresh_mining_views(l_trnng_day_key,l_apply_day_key);

Train the model again by calling Oracle Communications Data Model mining package.

Ensure that the model reflects the new definition (for example, that a new column has been added).

To add a new column to create_prpd_churn_svm_model, perform the following steps:

Add the new column to views that are used in create_prpd_churn_svm_model.

DWV_PRPD_CUST_CHRN_SRC

DWV_PRPD_CUST_CHRN_TST

DWV_PRPD_CUST_CHRN_APPLY

Train the model by issuing the following statement:

pkg_ocdm_mining.create_prpd_churn_svm_model(training_day_key);

Execute the following statement to query model details table and ensure the new column name is included in the query result:

SQL> SELECT attribute_name

FROM TABLE(SELECT ATTRIBUTE_SET FROM

TABLE(DBMS_DATA_MINING.GET_MODEL_DETAILS_SVM('OCDM_PRPD_CHURN_SVM'))

WHERE CLASS=1);

After you have populated Oracle Communications Data Model foundation layer and the derived tables, you can leverage the prebuilt Oracle Communications Data Model mining model for some more advanced analysis and predictions.

This tutorial shows you how to predict the prepaid customers who will terminate the service in next three months (churners) based on the populated Oracle Communications Data Model warehouse. Using prebuilt Oracle Communications Data Model Mining models, you can easily and very quickly see the prediction result of your customers, without having to go through all of the data preparation, training, testing and applying process that you must perform in a traditional from-scratch mining project.

See:

Oracle Data Mining Concepts for more information about the Oracle Database mining training and scoring (applying) process.After the initially generated mining model, as time goes by, the customer information and their behavior change. Consequently, you must refresh the previous trained mining models based on the latest customer and usage data. You can follow the process in this tutorial to refresh the mining models to acquire predictions on latest customer information.

This tutorial shows you how to generate the Churn Prediction model through Oracle Communications Data Model Mining APIs. To use different parameters in the training process, or customize the model in more advanced fashion, you can also use Oracle Data Miner to do the same work.

This tutorial consists of the following:

Before starting this tutorial:

Review the Oracle by Example (OBE) tutorial "Using Oracle Data Miner 11g Release 2." To access this tutorial, open the Oracle Learning Library in your browser by following the instructions in "Oracle Technology Network"; and, then, search for the tutorial by name.

Install Oracle Communications Data Model.

Populate the base, reference, and lookup tables.

Execute the intra-ETL.

Ensure that the following tables contain valid data:

DWB_ACCT_STAT_HIST |

DWB_BRDBND_USG_EVT |

DWB_FIXED_LN_CALL_EVT |

DWB_ISP_USG_EVT |

DWB_MMS_EVT |

DWB_NP_RQST_HDR |

DWB_SMS_EVT |

DWB_WRLS_CALL_EVT |

DWB_WRLS_CNTNT_DNLDG_EVT |

DWD_ACCT_DEBT_MO |

DWD_ACCT_PYMT_DAY |

DWD_CNTCT_CNTR_DAY |

DWD_CUST_DNA |

DWD_PRPD_ACCT_STTSTC_DAY |

DWL_AGE_BND |

DWL_AGE_ON_NET_BND |

DWL_DEBT_AGNG_BND |

DWR_ACCT |

DWR_ADDR_LOC |

DWR_AGRMNT |

DWR_CUST |

DWR_BSNS_MO |

DWR_CUST_SCR |

DWR_DEMOG_ATRIB |

DWR_EXTRNL_OPRTR |

DWR_HH |

DWR_INDVL_DEMOG_VAL |

DWR_JB |

DWR_PROD_SBRP |

DWR_SOC_JB |

Note:

If you have not populated the real customer data and you only want to learn the Oracle Communications Data Model mining model, you can use the sample data by taking the following steps:Ensure that during the install you generated the calendar data covering the range of 2011 through 2012. For example, by setting the parameters of starting from "20070101" for 10 years satisfy this condition.

Download the sample data (ocdm_for_mining.dmp.zip):

http://download.oracle.com/technetwork/database/options/comm-data-model/ocdm_for_mining.dmp.zip

Then unzip the sample data and import that data into your new ocdm_sys schema using the following command:

imp system/<password> file=ocdm_for_mining.dmp fromuser=ocdm_sample touser=ocdm_sys ignore=y log=mining_dump_import.log

This tutorial requires a valid, populated Oracle Communications Data Model warehouse.

Oracle by Example:

For more information about using SQL Developer, refer to tutorial "Getting Started with Oracle SQL Developer 3.0". To access this tutorial, open the Oracle Learning Library in your browser by following the instructions in "Oracle Technology Network"; and, then, search for the tutorial by name.To prepare the environment, take the following steps:

In SQL Developer, connect to the ocdm_sys schema.

Tip:

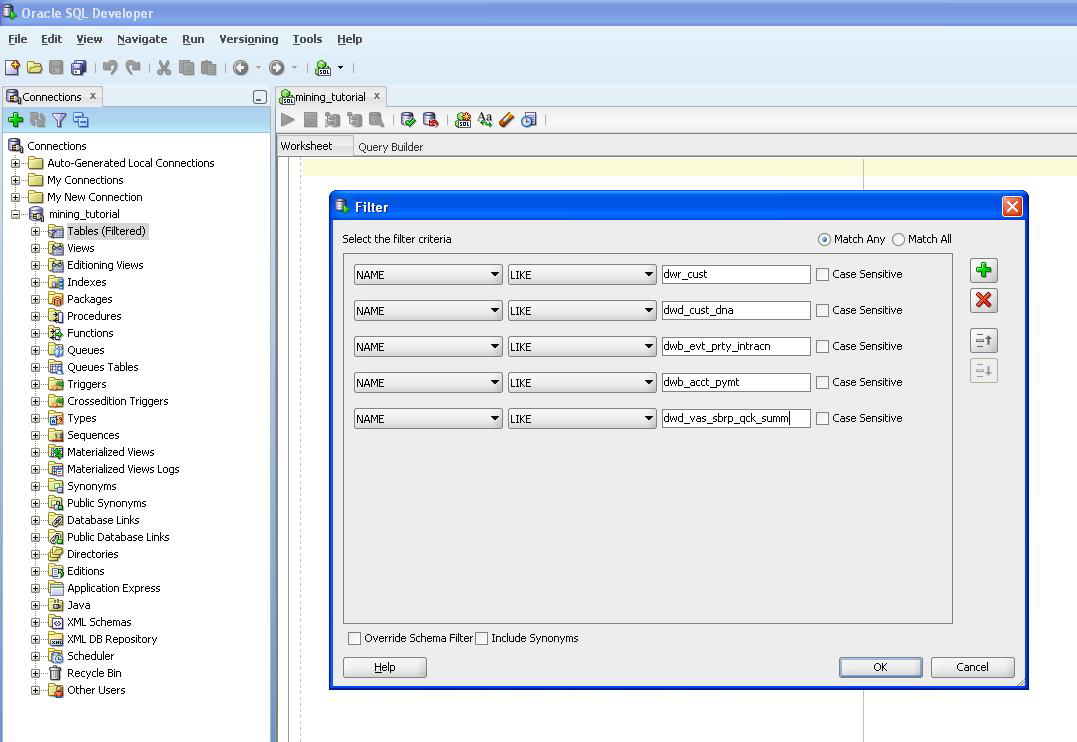

SQL Developer can be found on any Oracle Database Installation under$ORACLE_HOME/sqldeveloper.After you connect to the ocdm_sys schema, you can see all the tables in that schema. You can narrow down the list by right clicking the "table" and then applying filters:

(Optional) As mentioned in the "Preparing Your Environment"", if you have not populated tables with your data you can try using sample data. After you download the sample data, follow these steps to import the sample data:

Connect to sqlplus as sysdba:

sqlplus / as sysdba

Execute following commands to generate disable constraint script:

spool disable_constraints.sql SET PAGESIZE 15000 SET LINESIZE 1000 conn ocdm_sys/ocdm_sys SELECT 'ALTER TABLE ' || table_name || ' disable constraint ' || constraint_name || ';' FROM user_constraints WHERE STATUS='ENABLED' AND constraint_type = 'R'; SELECT 'ALTER TABLE ' || table_name || ' disable constraint ' || constraint_name || ';' FROM user_constraints WHERE STATUS='ENABLED' AND constraint_type = 'U'; SELECT 'ALTER TABLE ' || table_name || ' disable constraint ' || constraint_name || ';' FROM user_constraints WHERE STATUS='ENABLED' AND constraint_type = 'C'; SELECT 'ALTER TABLE ' || table_name || ' disable constraint ' || constraint_name || ';' FROM user_constraints WHERE STATUS='ENABLED' AND constraint_type = 'P'; spool off

Execute disable_constraints.sql script as ocdm_sys user:

sqlplus ocdm_sys/ocdm_sys @disable_constraints.sql

Import the sample dump into ocdm_sys schema by executing the following commands:

imp system/<password> file=ocdm_for_mining.dmp fromuser=ocdm_sample touser=ocdm_sys ignore=y log=mining_dump_import.log

Execute the following commands to generate enable constraint script:

spool enable_constraints.sql SET PAGESIZE 15000 SET LINESIZE 1000 conn ocdm_sys/ocdm_sys SELECT 'ALTER TABLE ' || table_name || ' enable constraint ' || constraint_name || ';' FROM user_constraints WHERE STATUS='DISABLED' AND constraint_type = 'R'; SELECT 'ALTER TABLE ' || table_name || ' enable constraint ' || constraint_name || ';' FROM user_constraints WHERE STATUS='DISABLED' AND constraint_type = 'U'; SELECT 'ALTER TABLE ' || table_name || ' enable constraint ' || constraint_name || ';' FROM user_constraints WHERE STATUS='DISABLED' AND constraint_type = 'C'; SELECT 'ALTER TABLE ' || table_name || ' enable constraint ' || constraint_name || ';' FROM user_constraints WHERE STATUS='DISABLED' AND constraint_type = 'P'; spool off

Execute enable_constraints.sql script as ocdm_sys user:

sqlplus ocdm_sys/ocdm_sys @enable_constraints.sql

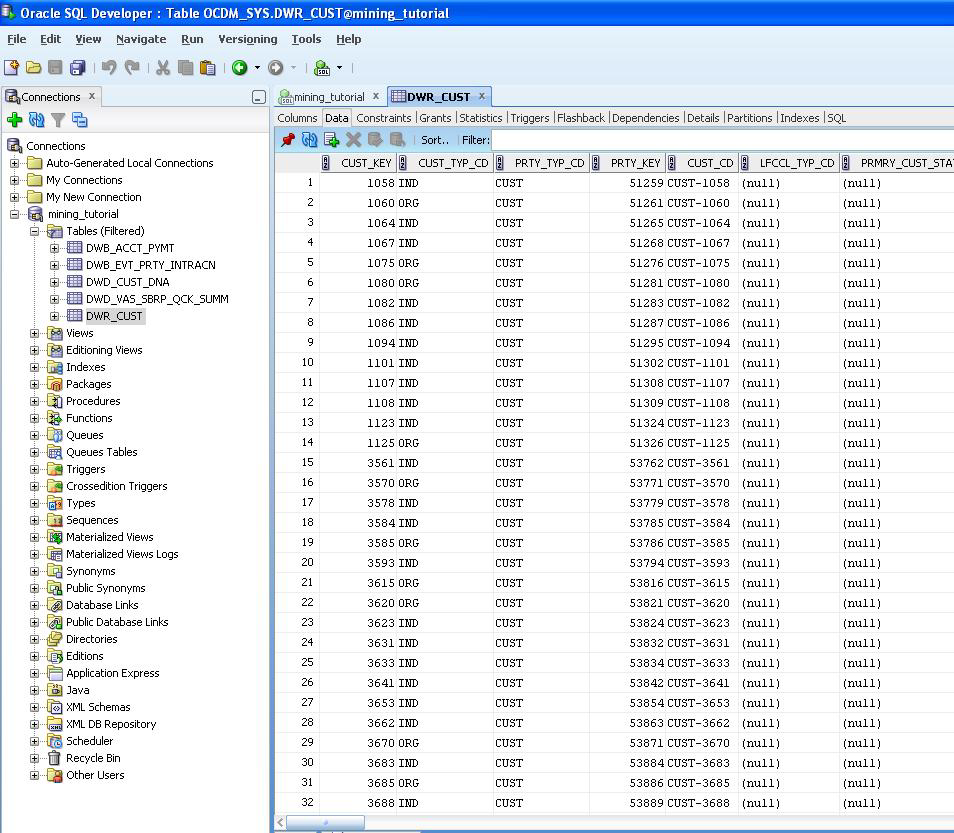

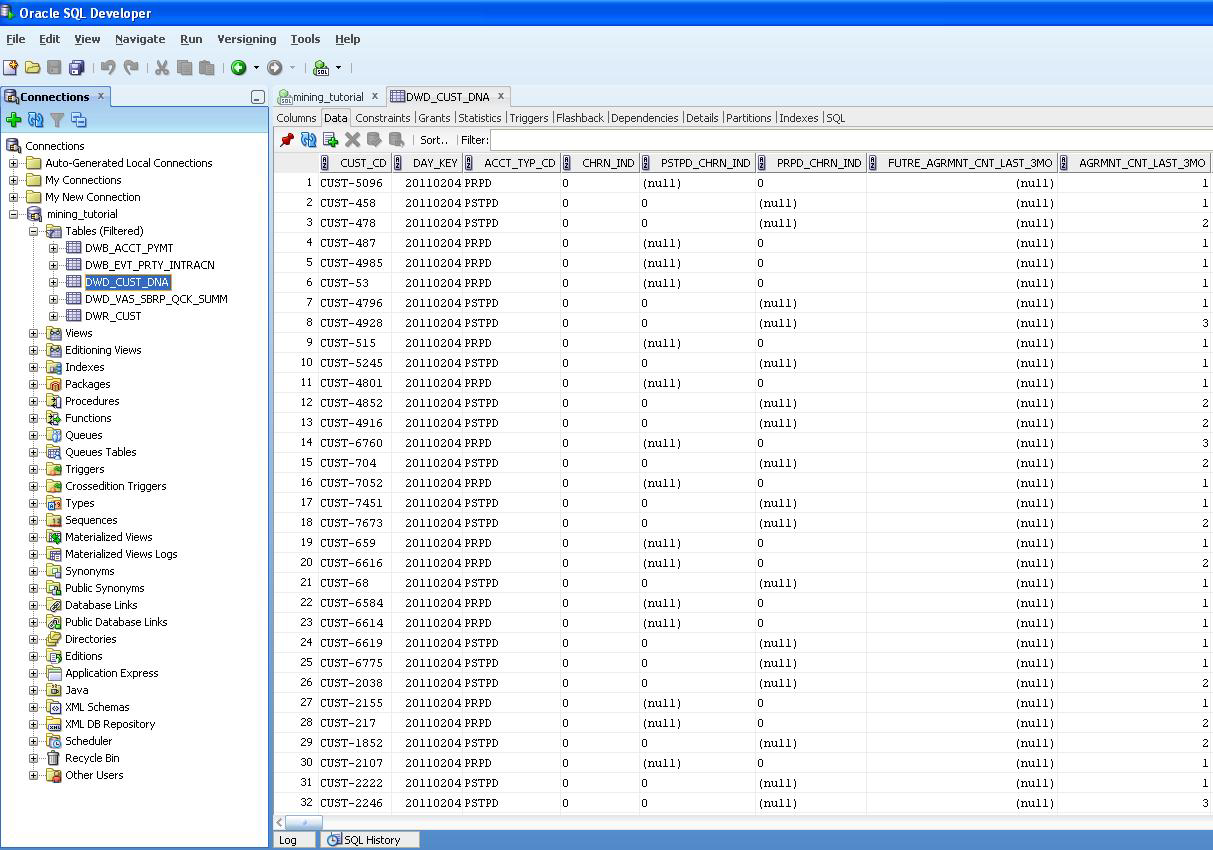

Review tables to ensure they contain valid data, either from your own customer data, or from the sample data:

Review the DWD_CUST_DNA table:

Select and check each of the following tables to ensure that the table is properly populated:

DWR_CUST

DWB_EVT_PRTY_INTRACN

DWB_ACCT_PYMT

DWD_CUST_DNA

DWD_VAS_SBRP_QCK_SUMM

For generating prepaid churn prediction model, refresh the mining views defined on mining source tables DWD_CUST_DNA and DWD_VAS_SBRP_QCK_SUMM.

Refresh mining views by passing training day key and apply day key. If you are using mining sample dump provided, then pass 20110201 as training day key and 20110301 as apply day key:

sqlplus ocdm_sys/ocdm_sys SQL> exec PKG_MINING_ETL.refresh_mining_views(l_trnng_day_key,l_apply_day_key);

Check user_mining_models data dictionary view for mining models. It should return no data if you have not previously trained any models:

SQL> SELECT * FROM user_mining_models;

Train the prepaid churn prediction model by executing following:

sqlplus ocdm_sys/ocdm_sys SQL> exec PKG_OCDM_MINING.create_prpd_churn_svm_model (l_trnng_day_key);

Check user_mining_models data dictionary view for trained prepaid churn prediction mining model:

SQL> SELECT * FROM user_mining_models;

Check DWD_CHRN_SVM_FACTOR table for prepaid churn SVM factors:

SQL> SELECT * FROM DWD_CHRN_SVM_FACTOR WHERE ACCT_TYP_CD = 'PRPD';

Note:

This tutorial does not refresh all models. It only refreshes one prepaid churn prediction model. To refresh all of the default mining models based on latest customer data, follow the instructions in "Refreshing the Data in an Oracle Communications Data Model Warehouse".After you refresh, train, and generate the data mining model, check the DWD_CUST_DNA results table in ocdm_sys schema as follows:

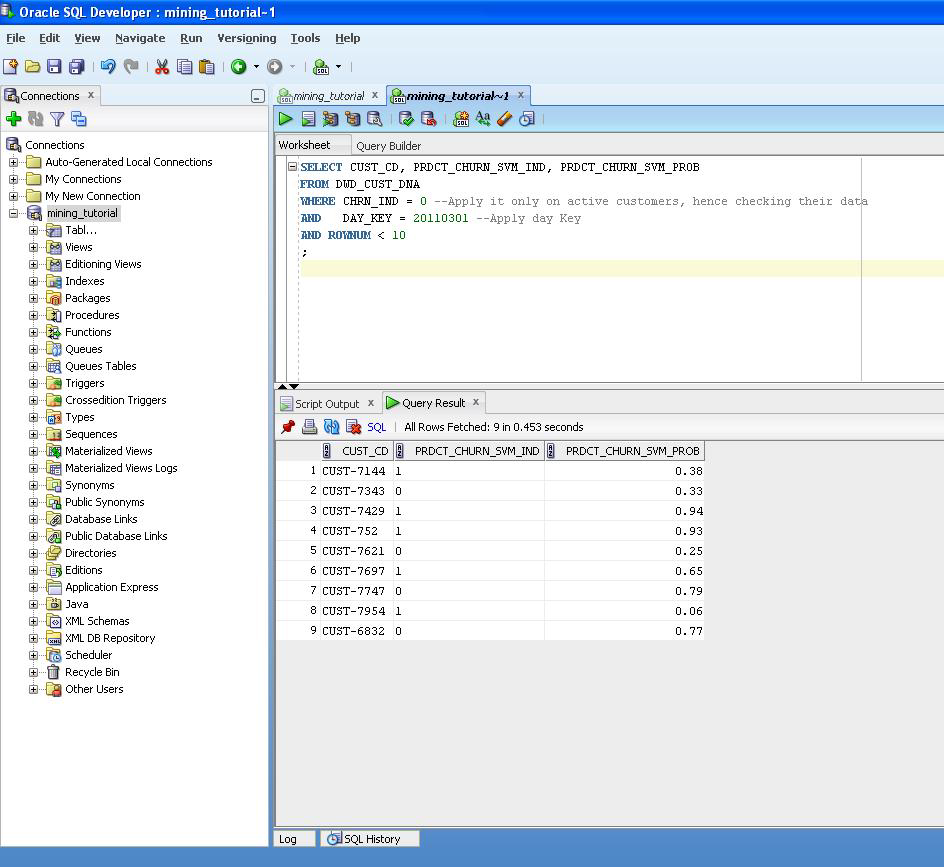

Issue the following query.

SQL> SELECT CUST_CD, PRDCT_CHURN_SVM_IND, PRDCT_CHURN_SVM_PROB FROM DWD_CUST_DNA WHERE CHRN_IND = 0 --Apply it only on active customers, hence checking their data AND DAY_KEY = 20110301 --Apply day Key AND ROWNUM < 10 ;

This provides results:

For each customer identified by CUST_CD, the PRDCT_CHRN_SVM_IND column gives a Boolean prediction of whether a customer will churn in next three months. Zero (0) stands for non-churner, while one (1) stands for churner. The PRDCT_CHURN_SVM_PROB column provides a more detailed probability (0~1) that specifies how likely a customer is going to churn.

(Optional) If you have also installed the Oracle Communications Data Model sample reports in Oracle Business Intelligence Suite Enterprise Edition 11g, you can also view the results as an Oracle Business Intelligence Suite Enterprise Edition report.

See:

Oracle Communications Data Model Installation Guide for more information on installing the sample reports and deploying the Oracle Communications Data Model RPD and webcat on the Business Intelligence Suite Enterprise Edition instance.Aggregate tables are tables that aggregate or "roll up" the data to one level higher than a base or derived table (and other functions can also be in the aggregate tables such as average, count, min, max, and others). The aggregate tables in the default Oracle Communications Data Model are actually materialized views and have a DWA_ prefix. These aggregate tables provide a view of the data similar to the view provided by a fact table in a snowflake schema.

The default Oracle Communications Data Model defines several aggregate tables. For example, the DWA_BER_FER_ERR_RATIO_MONTH table aggregates the values of the DWD_BER_FER_ERR_RATIO_DAY table to the month level.

See:

The "Aggregate Tables" topic in Oracle Communications Data Model Reference for a list of the aggregate tables in the default Oracle Communications Data Model.If, during fit-gap analysis, you identified a need for simple aggregated data that is not provided by the default aggregate tables, you can define new materialized views. When designing these tables, keep the following points in mind:

Create a query for the materialized view that aggregates up only a single level. For example, if aggregating over time, then aggregate only from day to month.

Note:

When you must aggregate up many levels (for example in time, month, quarter, and year) or different hierarchies (for example, the fiscal and calendar hierarchies for a time dimension), do not define aDWA_ table; instead, define the aggregations by creating OLAP cubes.Name the tables following the conventions outlined in "General Naming Conventions for Physical Objects" and use a DWA_ prefix.

There is often much discussion regarding the 'best' modeling approach to take for any given data warehouse with each style, classic 3NF and dimensional having their own strengths and weaknesses. It is likely that data warehouses must do more to embrace the benefits of each model type rather than rely on just one - this is the approach that was adopted in designing the Oracle Communications Data Model. The foundation layer of the Oracle Communications Data Model is a 3NF model. The default Oracle Communications Data Model also provides a dimensional model of the data. This dimensional model of the data is a perspective that summarizes and aggregates data, rather than preserving detailed transaction information.

Familiarize yourself with dimensional modeling by reading the following topics before you begin to customize the dimensional model of the default Oracle Communications Data Model:

Characteristics of the OLAP Cubes in Oracle Communications Data Model

Defining New Oracle OLAP Cubes for Oracle Communications Data Model

Changing an Oracle OLAP Cube in Oracle Communications Data Model

Creating a Forecast Cube for Oracle Communications Data Model

Choosing a Cube Partitioning Strategy for Oracle Communications Data Model

Choosing a Cube Data Maintenance Method for Oracle Communications Data Model

The simplicity of a dimensional model is inherent because it defines objects that represent real-world business entities. Analysts know which business measures they are interested in examining, which dimensions and attributes make the data meaningful, and how the dimensions of their business are organized into levels and hierarchies.

In the simplest terms, a dimensional model identifies the following objects:

Measures. Measures store quantifiable business data (such as sales, expenses, and inventory). Measures are also called "facts". Measures are organized by one or more dimensions and may be stored or calculated at query time:

Stored Measures. Stored measures are loaded and stored at the leaf level. Commonly, there is also a percentage of summary data that is stored. Summary data that is not stored is dynamically aggregated when queried.

Calculated Measures. Calculated measures are measures whose values are calculated dynamically at query time. Only the calculation rules are stored in the database. Common calculations include measures such as ratios, differences, totals and moving averages. Calculations do not require disk storage space, and they do not extend the processing time required for data maintenance.

Dimensions. A dimension is a structure that categorizes data to enable users to answer business questions. Commonly used dimensions are Customers, Products, and Time. A dimension's structure is organized hierarchically based on parent-child relationships. These relationships enable:

Navigation between levels.

Hierarchies on dimensions enable drilling down to lower levels or navigation to higher levels (rolling up). Drilling down on the Time dimension member 2012 typically navigates you to the quarters Q1 2012 through Q4 2012. In a calendar year hierarchy for 2012, drilling down on Q1 2012 would navigate you to the months, January 12 through March 12. These kinds of relationships make it easy for users to navigate through large volumes of multidimensional data.

Aggregation from child values to parent values.

The parent represents the aggregation of its children. Data values at lower levels aggregate into data values at higher levels. Dimensions are structured hierarchically so that data at different levels of aggregation are manipulated efficiently for analysis and display.

Allocation from parent values to child values.

The reverse of aggregation is allocation and is heavily used by planning budgeting, and similar applications. Here, the role of the hierarchy is to identify the children and descendants of particular dimension members of "top-down" allocation of budgets (among other uses).

Grouping of members for calculations.

Share and index calculations take advantage of hierarchical relationships (for example, the percentage of total profit contributed by each product, or the percentage share of product revenue for a certain category, or costs as a percentage of the geographical region for a retail location).

A dimension object helps to organize and group dimensional information into hierarchies. This represents natural 1:n relationships between columns or column groups (the levels of a hierarchy) that cannot be represented with constraint conditions. Going up a level in the hierarchy is called rolling up the data and going down a level in the hierarchy is called drilling down the data.

There are two ways that you can implement a dimensional model:

Relational tables in a star schema configuration. This traditional method of implementing a dimensional model is discussed in "Characteristics of Relational Star and Snowflake Tables".

Oracle OLAP Cubes. The physical model provided with Oracle Communications Data Model provides a dimensional perspective of the data using Oracle OLAP cubes. This dimensional model is discussed in "Characteristics of the OLAP Dimensional Model".

In the case of relational tables, the dimensional model has historically been implemented as a star or snowflake schema. Dimension tables (which contain information about hierarchies, levels, and attributes) join to one or more fact tables. Fact tables are the large tables that store quantifiable business measurements (such as sales, expenses, and inventory) and typically have foreign keys to the dimension tables. Dimension tables, also known as lookup or reference tables, contain the relatively static or descriptive data in the data warehouse.

A star schema is a relational schema whose design represents a multidimensional data model. The star schema consists of one or more fact tables and one or more dimension tables that are related through foreign keys. This allows drill paths, hierarchy and query profile to be embedded in the data model itself rather than the data. This in part at least, is what makes navigation of the model so straightforward for end users. Star schemas usually have a large fact table surrounded by smaller dimension tables. Dimension tables do not change very much. Most of the information that the users need are in the fact tables. Therefore, star schemas have fewer table joins than do 3NF models.

A star schema is so called because the diagram resembles a star, with points radiating from a center. The center of the star consists of one or more fact tables and the points of the star are the dimension tables.

Snowflake schemas are slight variants of a simple star schema where the dimension tables are further normalized and broken down into multiple tables. The snowflake aspect only affects the dimensions and not the fact table and is therefore considered conceptually equivalent to star schemas. Snowflake dimensions are useful and indeed necessary when there are fact tables of differing granularity. A month-level derived or aggregate table (or materialized view) must be associated with a month level snowflake dimension table rather than the default (lower) Day level star dimension table.

When a relational table acts as a dimension to a fact table, it is recommended that you declare that table as a dimension (even though it is not necessary). Defined dimensions can yield significant performance benefits, and support the use of more complex types of rewrite.

To define and declare the structure of the dimension use the CREATE DIMENSION command. Use the LEVEL clause to identify the names of the dimension levels.

To improve the data quality of the dimension data in the data warehouse, it is recommended that you validate the declarative information about the relationships between the dimension members after any modification to the dimension data.

To perform this validation, use the VALIDATE_DIMENSION procedure of the DBMS_DIMENSION package. When the VALIDATE_DIMENSION procedure encounters any errors, the procedure places the errors into the DIMENSION_EXCEPTIONS table. To find the exceptions identified by the VALIDATE_DIMENSION procedure, query the DIMENSION_EXCEPTIONS table.

You can schedule a call to the VALIDATE_DIMENSION procedure as a post-process step to the regular Incremental Dimension load script. This can be done before the call to refresh the derived or aggregate tables of the data model through materialized view refresh, intra-ETL package calls.

Oracle OLAP Cubes logically represent data similar to relational star tables, although the data is actually stored in multidimensional arrays. Like dimension tables, cube dimensions organize members into hierarchies, levels, and attributes. The cube stores the measure (fact) data. The dimensions form the edges of the cube.

Oracle OLAP is an OLAP server embedded in the Oracle Database. Oracle OLAP provides native multidimensional storage and speed-of-thought response times when analyzing data across multiple dimensions. The database provides rich support for analytics such as time series calculations, forecasting, advanced aggregation with additive and nonadditive operators, and allocation operations.

By integrating multidimensional objects and analytics into the database, Oracle Database provides the best of both worlds: the power of multidimensional analysis along with the reliability, availability, security, and scalability of the Oracle database.

Oracle OLAP is fully integrated into Oracle Database. At a technical level, this means:

The OLAP engine runs within the kernel of Oracle Database.

Dimensional objects are stored in Oracle Database in their native multidimensional format.

Cubes and other dimensional objects are first class data objects represented in the Oracle data dictionary.

Data security is administered in the standard way, by granting and revoking privileges to Oracle Database users and roles.

OLAP cubes, dimensions, and hierarchies are exposed to applications as relational views. Consequently, applications can query OLAP objects using SQL as described in "Oracle OLAP Cube Views" and Chapter 5, "Report and Query Customization."

Oracle OLAP cubes can be enhanced so that they are materialized views as described in "Cube Materialized Views".

See also:

Oracle OLAP User's Guide and"Characteristics of the OLAP Cubes in Oracle Communications Data Model".The benefits of using Oracle OLAP are significant; Oracle OLAP offers the power of simplicity and provides: One database, standard administration and security, standard interfaces and development tools.

The Oracle OLAP dimensional data model is highly structured. Structure implies rules that govern the relationships among the data and control how the data can be queried. Cubes are the physical implementation of the dimensional model, and thus are highly optimized for dimensional queries. The OLAP engine leverages this innate dimensionality in performing highly efficient cross-cube joins for inter-row calculations, outer joins for time series analysis, and indexing. Dimensions are pre-joined to the measures. The technology that underlies cubes is based on an indexed multidimensional array model, which provides direct cell access.

The OLAP engine manipulates dimensional objects in the same way that the SQL engine manipulates relational objects. However, because the OLAP engine is optimized to calculate analytic functions, and dimensional objects are optimized for analysis, analytic and row functions can be calculated much faster in OLAP than in SQL.

The dimensional model enables Oracle OLAP to support high-end business intelligence tools and applications such as OracleBI Discoverer Plus OLAP, OracleBI Spreadsheet Add-In, Oracle Business Intelligence Suite Enterprise Edition, BusinessObjects Enterprise, and Cognos ReportNet.

Oracle OLAP Dimensional Objects

Oracle OLAP dimensional objects include cubes, measures, dimensions, hierarchies, levels and attributes. The OLAP dimensional objects are described in detail in Oracle OLAP User's Guide. Figure 3-2 shows the general relationships among the objects.

Figure 3-2 Diagram of the OLAP Dimensional Model

When you define an OLAP cube, Oracle OLAP automatically generates a set of relational views on the cube and its dimensions and hierarchies

Cube view. Each cube has a cube view that presents the data for all the measures and calculated measures in the cube. You can use a cube view like a fact table in a star or snowflake schema. However, the cube view contains all the summary data in addition to the detail level data. The default name of a cube view is cube_VIEW.

Dimension and hierarchy views. Each dimension has one dimension view plus a hierarchy view for each hierarchy associated with the dimension. The default name for a dimension view is dimension_VIEW. For a hierarchy view, the default name is dimension_hierarchy_VIEW.

These views are related in the same way as fact and dimension tables in a star schema. Cube views serve the same function as fact tables, and hierarchy views and dimension views serve the same function as dimension tables. Typical queries join a cube view with either a hierarchy view or a dimension view.

SQL applications query these views to display the information-rich contents of these objects to analysts and decision makers. You can also create custom views that follow the structure expected by your applications, using the system-generated views like base tables.

See also:

The discussion on querying dimensional objects in Oracle OLAP User's Guide and Chapter 5, "Report and Query Customization."Oracle OLAP cubes can be enhanced so that they are materialized views. A cube that has been enhanced in this way is called a cube materialized view and has a CB$ prefix. Cube materialized views can be incrementally refreshed through the Oracle Database materialized view subsystem, and they can serve as targets for transparent rewrite of queries against the source tables.

The OLAP dimensions associated with a cube materialized view are also defined with materialized view capabilities.

Necessary Cube Characteristics for Cube Materialized Views

A cube must conform to the following requirements, before it can be designated as a cube materialized view:

All dimensions of the cube have at least one level and one level-based hierarchy. Ragged and skip-level hierarchies are not supported. The dimensions must be mapped.

All dimensions of the cube use the same aggregation operator, which is either SUM, MIN, or MAX.

The cube has one or more dimensions and one or more measures.

The cube is fully defined and mapped. For example, if the cube has five measures, then all five are mapped to the source tables.

The data type of the cube is NUMBER, VARCHAR2, NVARCHAR2, or DATE.

The source detail tables support dimension and rely constraints. If they have not been defined, then use the Relational Schema Advisor to generate a script that defines them on the detail tables.

The cube is compressed.

The cube can be enriched with calculated measures, but it cannot support more advanced analytics in a cube script.

Adding Materialized View Capabilities

To add materialized view capabilities to an OLAP cube, take the following steps:

In the Analytic Workspace Manager, connect to the ocdm_sys schema.

From the cube list, select the cube which you want to enable.

In the right pane, select the Materialized Views tab.

Select Enable Materialized View Refresh of the Cube. then click Apply.

Note:

You cannot enable the cube materialized view for a forecast cube.Oracle by Example:

For more information on working with OLAP cubes, see the following OBE tutorials:"Querying OLAP 11g Cubes"

"Using Oracle OLAP 11g With Oracle BI Enterprise Edition"

To access the tutorials, open the Oracle Learning Library in your browser by following the instructions in "Oracle Technology Network"; and, then, search for the tutorials by name.

See also:

Oracle OLAP User's GuideThe default access layer of Oracle Communications Data Model provides a dimensional perspective of the data using Oracle OLAP cubes.

There are OLAP cubes defined in the default ocdm_sys schema. These cubes have the general characteristics described in "Characteristics of the OLAP Dimensional Model". Specifically, OLAP cubes in the Oracle Communications Data Model have the following characteristics:

All of the default OLAP cubes are loaded with data from DWA_ tables that are materialized views.

The cubes were defined and built using the Analytical Workspace Manager (AWM) client tool.

A relational view (with a _VIEW suffix) is defined over each of the OLAP cubes.

All of the OLAP cubes in the Oracle Communications Data Model are cube materialized views (that is, CB$ objects).

Note:

Immediately after installation, all materialized views underlying the OLAP cubes are disabled by default. To enable the cube materialized views, you must follow the steps outlined in "Adding Materialized View Capabilities".For information on the using OLAP cubes in your customized version of Oracle Communications Data Model, see Oracle OLAP User's Guide and the following topics:

Defining New Oracle OLAP Cubes for Oracle Communications Data Model

Changing an Oracle OLAP Cube in Oracle Communications Data Model

Creating a Forecast Cube for Oracle Communications Data Model

Choosing a Cube Partitioning Strategy for Oracle Communications Data Model

Choosing a Cube Data Maintenance Method for Oracle Communications Data Model

You can add new OLAP cubes to the ocdm_sys schema. For consistency's sake, design and define these new cubes as described in "Characteristics of the OLAP Cubes in Oracle Communications Data Model".

Take the following steps to define new cubes:

Ensure that there is an aggregate table (DWA_) to use as the "lowest leaf" data for the cube. See "Aggregate Tables in the Oracle Communications Data Model" for information on creating new tables.

Use the AWM to define new Cubes for a customized version of Oracle Communications Data Model. Follow the instructions given for creating cubes and dimensions in Oracle OLAP User's Guide.

Use the information provided in "Characteristics of the OLAP Dimensional Model". and the Oracle OLAP User's Guide to guide you when you design and define new OLAP cubes. Also, if you are familiar with a relational star schema design as outlined in "Characteristics of Relational Star and Snowflake Tables", then you can use this understanding to help you design an OLAP Cube:

Fact tables correspond to cubes.

Data columns in the fact tables correspond to measures.

Foreign key constraints in the fact tables identify the dimension tables.

Dimension tables identify the dimensions.

Primary keys in the dimension tables identify the base-level dimension members.

Parent columns in the dimension tables identify the higher level dimension members.

Columns in the dimension tables containing descriptions and characteristics of the dimension members identify the attributes.

You can also get insights into the dimensional model by looking at the sample reports included with Oracle Communications Data Model.

See:

Oracle Communications Data Model Installation Guide for more information on installing the sample reports and deploying the Oracle Communications Data Model RPD and webcat on the Business Intelligence Suite Enterprise Edition instance.Tip:

While investigating your source data, you may decide to create relational views that more closely match the dimensional model that you plan to create.Add materialized view capabilities to the OLAP cubes as described in "Adding Materialized View Capabilities".

See also:

Oracle OLAP User's Guide, "Defining New Oracle OLAP Cubes for Oracle Communications Data Model", and the sample reports in Oracle Communications Data Model Reference.Oracle by Example:

For more information on creating OLAP cubes, see the "Building OLAP 11g Cubes" OBE tutorial.To access the tutorial, open the Oracle Learning Library in your browser by following the instructions in "Oracle Technology Network"; and, then, search for the tutorial by name.

Common customizations to Oracle Communications Data Model cubes are changing the dimensions or the measures of the cube.

Since all Oracle Communications Data Model cubes load data from tables with the DWA_ prefix, to change the measures or dimensions of one cube, you must take the following steps:

Use the information in Oracle Communications Data Model Reference, to identify the DWA_ table from which the OLAP cube is populated.

Change the structure of the DWA_ table identified in Step 1.

Change the OLAP cube and cube materialized views to reflect the new structure.

To create a forecast cube for Oracle Communications Data Model:

Create a cube to contain the results of the forecast as described in "Defining New Oracle OLAP Cubes for Oracle Communications Data Model".

Note:

You cannot enable materialized views for an Oracle Communications Data Model forecast cube.Write an OLAP DML forecasting context program as described in Oracle OLAP DML Reference.

Partitioning is a method of physically storing the contents of a cube. It improves the performance of large cubes in the following ways:

Improves scalability by keeping data structures small. Each partition functions like a smaller measure.

Keeps the working set of data smaller both for queries and maintenance, since the relevant data is stored together.

Enables parallel aggregation during data maintenance. Each partition can be aggregated by a separate process.

Simplifies removal of old data from storage. Old partitions can be dropped, and new partitions can be added.

The number of partitions affects the database resources that can be allocated to loading and aggregating the data in a cube. Partitions can be aggregated simultaneously when sufficient resources have been allocated.

The Cube Partitioning Advisor analyzes the source tables and develops a partitioning strategy. You can accept the recommendations of the Cube Partitioning Advisor, or you can make your own decisions about partitioning.

If your partitioning strategy is driven primarily by life-cycle management considerations, then you should partition the cube on the Time dimension. Old time periods can then be dropped as a unit, and new time periods added as a new partition. The Cube Partitioning Advisor has a Time option, which recommends a hierarchy and a level in the Time dimension for partitioning.

The level on which to partition a cube is determined based on a trade off between load performance and query performance.

Typically, you do not want to partition on too low a level (for example, on the DAY level of a TIME dimension) because if you do then too many partitions must be defined at load time which slows down an initial or historical load. Also, a large number of partitions can result in unusually long Analytic Workspace attach times and slows down the Time Series-based calculations. Also, a Quarterly Cumulative measure (Quarter to Date Measure) needs to access 90 or 91 partitions to calculate a value for one Customer and Organization. All dimension members above the partition level of partition dimension (including those belonging to nondefault hierarchies) would be present in a single default template. Day level partitioning makes this very heavy since all higher level members are stored in default template. However, the advantage of partitioning DAY if the OLAP Cube load frequency is daily then there you must only load from a new partition in fact table into a single partition in the OLAP cube every day. This greatly improves the load performance since percentage-based refresh can be enabled if the cube is materialized-view enabled and has materialized-view logs.

Recommendations: Cube Partitioning Strategy

Usually a good compromise between the differing load and query performance requirements is to use an intermediate level like MONTH as the partition level. Time series calculations within a month (week to date, month to date, and so on) are fast and higher level calculations like year to date needs to refer to 12 partitions at most. Also this way the monthly partition is defined and created only one time (that is during the initial load on first of each month) and is then reused for each subsequent load that month. The aggregation process may be triggered off at the month level (instead of specific day level) and some redundant aggregations (of previously loaded dates of current month) may occur each time but it should result in satisfactory load and query performance.

See also:

"The discussion on choosing a partition strategy in Oracle OLAP User's Guide, "Indexes and Partitioned Indexes in the Oracle Communications Data Model", and "Partitioning and Materialized Views".While developing a dimensional model of your data, it is a good idea to map and load each object immediately after you create it so that you can immediately detect and correct any errors that you made to the object definition or the mapping.

However, in a production environment, you want to perform routine maintenance as quickly and easily as possible. For this stage, you can choose among data maintenance methods. You can refresh all cubes using the Maintenance Wizard. This wizard enables you to refresh a cube immediately, or submit the refresh as a job to the Oracle job queue, or generate a PL/SQL script. You can run the script manually or using a scheduling utility, such as Oracle Enterprise Manager Scheduler or the DBMS_SCHEDULER PL/SQL package. The generated script calls the BUILD procedure of the DBMS_CUBE PL/SQL package. You can modify this script or develop one from the start using this package.

The data for a partitioned cube is loaded and aggregated in parallel when multiple processes have been allocated to the build. You are able to see this in the build log.

In addition, each cube can support these data maintenance methods:

Custom cube scripts

Cube materialized views

If you are defining cubes to replace existing materialized views, then you use the materialized views as an integral part of data maintenance. Note, however, that materialized view capabilities restrict the types of analytics that can be performed by a custom cube script.

Oracle by Example:

See the following OBE tutorial for an example of how Oracle uses cube materialized views for transparent access to a relational star schema.:"Querying OLAP 11g Cubes"

To access the tutorial, open the Oracle Learning Library in your browser by following the instructions in "Oracle Technology Network"; and, then, search for the tutorial by name.

Materialized views are query results that have been stored or "materialized" in advance as schema objects. From a physical design point of view, materialized views resemble tables or partitioned tables and behave like indexes in that they are used transparently and can improve performance.

In the past, organizations using summaries spent a significant amount of time and effort creating summaries manually, identifying which summaries to create, indexing the summaries, updating them, and advising their users on which ones to use. With the advent of materialized views, a database administrator creates one or more materialized views, which are the equivalent of a summary. Thus, the workload of the database administrator is eased and the user no longer needed to be aware of the summaries that had been defined. Instead, the end user queries the tables and views at the detail data level. The query rewrite mechanism in the Oracle server automatically rewrites the SQL query to use the summary tables and reduces response time for returning results from the query.

Materialized views improve query performance by precalculating expensive join and aggregation operations on the database before executing and storing the results in the database. The query optimizer automatically recognizes when an existing materialized view can and should be used to satisfy a request.

The default Oracle Communications Data Model defines many materialized views. In the default ocdm_sys schema, you can identify these materialized views by looking at objects with the prefixes listed in the following table.

| Prefix | Description |

|---|---|

DWA_ |

Aggregate tables which are materialized views.

See: Aggregate tables in Oracle Communications Data Model Reference for a list of these objects in the default data model. "Aggregate Tables in the Oracle Communications Data Model" for more information on customizing these objects,. |

CB$ |

An OLAP cube enhanced with materialized view capabilities.

See: OLAP cube materialized views in Oracle Communications Data Model Reference for a list of these objects in the default data model. "Characteristics of the OLAP Cubes in Oracle Communications Data Model" for information on OLAP cubes. Note: Do |

DMV_ |

Materialized views created for performance reasons (that is, not an aggregate table or a cube materialized view).

See: Oracle Communications Data Model Reference to identify these objects in the default data model. |

The following topics provide more information on using and creating materialized views in your customized Oracle Communications Data Model:

Refresh option vary by the type of materialized view:

See:

Oracle OLAP User's Guide for a discussion of creating materialized views of Oracle OLAP cubes.In data warehouses, materialized views normally contain aggregates. The DWA_ tables in the default Oracle Communications Data Model are this type of materialized view.

For a materialized view with aggregates, for fast refresh to be possible:

The SELECT list must contain all of the GROUP BY columns (if present).

There must be a COUNT(*) and a COUNT(column) on any aggregated columns.

Materialized view logs must be present on all tables referenced in the query that defines the materialized view. The valid aggregate functions are: SUM, COUNT(x), COUNT(*), AVG, VARIANCE, STDDEV, MIN, and MAX, and the expression to be aggregated can be any SQL value expression.

Fast refresh for a materialized view containing joins and aggregates is possible after any type of DML to the base tables (direct load or conventional INSERT, UPDATE, or DELETE).

You can define that the materialized view be refreshed ON COMMIT or ON DEMAND. A REFRESH ON COMMIT materialized view is automatically refreshed when a transaction that does DML to a materialized view's detail tables commits.

When you specify REFRESH ON COMMIT, the table commit can take more time than if you have not. This is because the refresh operation is performed as part of the commit process. Therefore, this method may not be suitable if many users are concurrently changing the tables upon which the materialized view is based.

Some materialized views contain only joins and no aggregates (for example, when a materialized view is created that joins the sales table to the times and customers tables). The advantage of creating this type of materialized view is that expensive joins are precalculated.

Fast refresh for a materialized view containing only joins is possible after any type of DML to the base tables (direct-path or conventional INSERT, UPDATE, or DELETE).

A materialized view containing only joins can be defined to be refreshed ON COMMIT or ON DEMAND. If it is ON COMMIT, the refresh is performed at commit time of the transaction that does DML on the materialized view's detail table.

If you specify REFRESH FAST, Oracle Database performs further verification of the query definition to ensure that fast refresh can be performed if any of the detail tables change. These additional checks are:

A materialized view log must be present for each detail table unless the table supports partition change tracking. Also, when a materialized view log is required, the ROWID column must be present in each materialized view log.

The rowids of all the detail tables must appear in the SELECT list of the materialized view query definition.

If some of these restrictions are not met, you can create the materialized view as REFRESH FORCE to take advantage of fast refresh when it is possible. If one table does not meet all of the criteria, but the other tables do the materialized view is still fast refreshable with respect to the other tables for which all the criteria are met.

To achieve an optimally efficient refresh:

Ensure that the defining query does not use an outer join that behaves like an inner join. If the defining query contains such a join, consider rewriting the defining query to contain an inner join.

If the materialized view contains only joins, the ROWID columns for each table (and each instance of a table that occurs multiple times in the FROM list) must be present in the SELECT list of the materialized view.

If the materialized view has remote tables in the FROM clause, all tables in the FROM clause must be located on that same site. Further, ON COMMIT refresh is not supported for materialized view with remote tables. Except for SCN-based materialized view logs, materialized view logs must be present on the remote site for each detail table of the materialized view and ROWID columns must be present in the SELECT list of the materialized view.

A nested materialized view is a materialized view whose definition is based on another materialized view. A nested materialized view can reference other relations in the database in addition to referencing materialized views.

In a data warehouse, you typically create many aggregate views on a single join (for example, rollups along different dimensions). Incrementally maintaining these distinct materialized aggregate views can take a long time, because the underlying join has to be performed many times.

Using nested materialized views, you can create multiple single-table materialized views based on a joins-only materialized view and the join is performed just one time. In addition, optimizations can be performed for this class of single-table aggregate materialized view and thus refresh is very efficient.

Some types of nested materialized views cannot be fast refreshed. Use EXPLAIN_MVIEW to identify those types of materialized views.

You can refresh a tree of nested materialized views in the appropriate dependency order by specifying the nested =TRUE parameter with the DBMS_MVIEW.REFRESH parameter.

The two most common operations on a materialized view are query execution and fast refresh, and each operation has different performance requirements:

Query execution might need to access any subset of the materialized view key columns, and might need to join and aggregate over a subset of those columns. Consequently, for best performance, create a single-column bitmap index on each materialized view key column.

In the case of materialized views containing only joins using fast refresh, create indexes on the columns that contain the rowids to improve the performance of the refresh operation.

If a materialized view using aggregates is fast refreshable, then an index appropriate for the fast refresh procedure is created unless USING NO INDEX is specified in the CREATE MATERIALIZED VIEW statement.

Because of the large volume of data held in a data warehouse, partitioning is an extremely useful option when designing a database. Partitioning the fact tables improves scalability, simplifies system administration, and makes it possible to define local indexes that can be efficiently rebuilt. Partitioning the fact tables also improves the opportunity of fast refreshing the materialized view because this may enable partition change tracking refresh on the materialized view.

Partitioning a materialized view has the same benefits as partitioning fact tables. When a materialized view is partitioned a refresh procedure can use parallel DML in more scenarios and partition change tracking-based refresh can use truncate partition to efficiently maintain the materialized view.

See also:

Oracle Database VLDB and Partitioning Guide, "Partitioned Tables in the Oracle Communications Data Model", "Indexes and Partitioned Indexes in the Oracle Communications Data Model", and "Choosing a Cube Partitioning Strategy for Oracle Communications Data Model"Using Partition Change Tracking

It is possible and advantageous to track freshness to a finer grain than the entire materialized view. The ability to identify which rows in a materialized view are affected by a certain detail table partition, is known as partition change tracking. When one or more of the detail tables are partitioned, it may be possible to identify the specific rows in the materialized view that correspond to a modified detail partition(s). those rows become stale when a partition is modified while all other rows remain fresh.

You can use partition change tracking to identify which materialized view rows correspond to a particular partition. Partition change tracking is also used to support fast refresh after partition maintenance operations on detail tables. For instance, if a detail table partition is truncated or dropped, the affected rows in the materialized view are identified and deleted. Identifying which materialized view rows are fresh or stale, rather than considering the entire materialized view as stale, allows query rewrite to use those rows that refresh while in QUERY_REWRITE_INTEGRITY = ENFORCED or TRUSTED modes.

Several views, such as DBA_MVIEW_DETAIL_PARTITION, detail which partitions are stale or fresh. Oracle does not rewrite against partial stale materialized views if partition change tracking on the changed table is enabled by the presence of join dependent expression in the materialized view.

To support partition change tracking, a materialized view must satisfy the following requirements:

At least one detail table referenced by the materialized view must be partitioned.

Partitioned tables must use either range, list or composite partitioning.

The top level partition key must consist of only a single column.

The materialized view must contain either the partition key column or a partition marker or ROWID or join dependent expression of the detail table.

If you use a GROUP BY clause, the partition key column or the partition marker or ROWID or join dependent expression must be present in the GROUP BY clause.

If you use an analytic window function or the MODEL clause, the partition key column or the partition marker or ROWID or join dependent expression must be present in their respective PARTITION BY subclauses.

Data modifications can only occur on the partitioned table. If partition change tracking refresh is being done for a table which has join dependent expression in the materialized view, then data modifications should not have occurred in any of the join dependent tables.

The COMPATIBILITY initialization parameter must be a minimum of 9.0.0.0.0.

Partition change tracking is not supported for a materialized view that refers to views, remote tables, or outer joins.

Using data compression for a materialized view brings you a additional dramatic performance improvement.

Consider data compression when using highly redundant data, such as tables with many foreign keys. In particular, likely candidates are materialized views created with the ROLLUP clause.